Intel's Xeon Phi Uptake Measured from Real Users

Introduction

LSU announced yesterday that they received a $4m grant from the NSF to deploy a cluster rich in Intel's Xeon Phi (MIC) coprocessor cards, and that 40% of this machine will become available for XSEDE users through the XSEDE research allocation committee review process. I'm personally excited that LSU is getting back into XSEDE not only because they are throwing more cycles into the pile, but because the potential for high impact is greater there than in other regions that already have a rich scientific and cyberinfrastructural research base.

However, the new machine (SuperMIC) was pitched as one that is extremely heavy in its use of accelerators like Xeon Phi and NVIDIA Kepler GPUs to boast an impressive petaflop-level aggregate performance. While I've made no bones about how I see GPGPUs in the future of supercomputing, Xeon Phi has looked like a nicer alternative because it can use standard APIs like MPI and OpenMP and, ostensibly, requires minimal modification of code to utilize the accelerator. A discussion of whether users actually realize this ease of use was sparked among some colleagues and me yesterday, and I was left wondering: are people actually using Xeon Phi processors for research, or has uptake been inflated by marketing?

Before I continue with the answer though, I need to make the following disclaimer:

This data and the conclusions therein are derived purely from publicly available data. The interpretations are solely my own and do not necessarily represent the views of my employer or the National Science Foundation. I did this analysis with neither the blessing nor knowledge of either my employer or TACC, so within the context of the following post, I am just some guy who scraped some data off the internet.

With that being said, some of the folks at TACC were kind enough to provide some additional insight into interpreting the data. A week after initially posting this, I edited it to reflect new information.

Premise

TACC's Stampede supercomputer was really the flagship large-scale deployment of Intel's Xeon Phi (MIC) coprocessors for production research, and by virtue of the fact that almost all of its cycles are provided through XSEDE, anyone can view some pretty detailed workload statistics from it via XDMoD, XSEDE's technology auditing service.

A nice feature of Stampede is that it has a number of queues, namely the normal and normal-mic queues, that encompass mostly the same sets of nodes but allow users to specify whether or not their jobs will absolutely require nodes that have MIC coprocessors. The physical nodes in each queue are the same, so looking at the jobs submitted to the normal queue versus the normal-mic queue should give a reasonable indication of how many users are actually using the 6,440 Xeon Phi coprocessor cards (5,560 single-MIC nodes + 440 dual-MIC nodes) that are currently (as of this posting) in production on Stampede.

Because most of Stampede's nodes have only one coprocessor card each (as opposed to more accelerator-rich systems like NICS' Beacon, NICS/GA Tech's Keeneland, or Tokyo Tech's Tsubame2), the machine attracts both regular supercomputing users and those who want to use MICs. In addition, Stampede's MICs are "free" in that users don't get charged extra time if they use the MIC present on every node. Thus, the workload experienced on Stampede should capture a good representation of the greater HPC user community since it doubles as a "conventional" supercomputer as well as one of the few large deployments of MICs.

Update (10/1): There has been a little bit of confusion as to what the data below actually represents, so for the sake of clarity, I should highlight two major caveats:

- Judging MIC usage by the queue to which jobs are submitted is not rigorous. This essentially relies on users self-identifying their workload, since (a) jobs in the normal queue can utilize the MICs transparently if they are compiled against the MIC-enabled MKL, and (b) there's no penalty to prevent users from submitting non-MIC jobs to the normal-mic queue. In addition, as +Bill Barth mentioned in his comment below, the development queue supports both MIC and non-MIC jobs but provides no public-facing means to tell what job is doing what.

- Although they were integrated on the production compute nodes, Stampede's MICs didn't actually pass acceptance testing until August 2013--eight months after Stampede opened to the public. Any user could use the MICs on his or her node, but the MICs were not considered to be in production.

Method

Getting these numbers from XSEDE's XDMoD service requires a little bit of work, and unfortunately XDMoD makes it difficult to share links to the dynamic plots it can generate. If you'd like to get an idea of how I generated the first few figures I'll present, you can access the data by following these steps:

- Visit the XDMoD website (https://xdmod.ccr.buffalo.edu/)

- At the very top left, click over to the Usage tab

- In the left pane, "Jobs Summary" will be expanded by default. Collapse it, then expand the "Jobs by Resource" tree

- Click "CPU Hours Total" (this is what I'll show first)

- Click the bar for Stampede on the plot that comes up. You will get a context menu that lets you drill down to Queue

You should now see all of Stampede's queues and how many CPU hours have been charged to each one. The time period being reported is the previous month by default; there is an option along one of the top bars to change this.

In the following discussion, it is important to note that a "core hour" is defined as the use of a single Intel Xeon E5-2680 core AND a proportional fraction of the Intel Xeon Phi coprocessor available on each node (if available). At present, XSEDE users get MIC time for free and are only charged for the core hours delivered by the CPUs on each node, so a job that charges sixteen core hours may or may not use the MIC; those sixteen core hours are equivalent regardless.

Absolute Numbers

I think the easiest way to determine if researchers are actually using the Xeon Phi coprocessors in Stampede is to just look at the total number of core-hours charged over Stampede's entire lifetime, starting from its deployment in January 2013:

Upon noting that the above plot represents usage on a log scale, it should become evident that there is a difference of over two orders of magnitude between the time charged by jobs that do not use MICs and the jobs that do. The exact numbers, also available through XDMoD, are

- 286,179,494 core hours charged by jobs that don't use MICs

- 1,548,250 core hours charged by jobs that need a single MIC, plus up to 2,035,036 from the development queue

- 35 core hours charged by jobs that need two MICs on the same host (this option is very new to Stampede)

Thus, somewhere around 100x more core-hours are charged by jobs that don't use MICs than by jobs that do. Granted, this may not be as bad as you might initially think--recall that a "core hour" as defined here is an hour spent using a single core on a Xeon E5-2680. However, the fact remains that over 94% of the time charged on Stampede does not use the Xeon Phi coprocessors.
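The "around 100x" figure can be bracketed with a few lines of arithmetic. The sketch below uses only the core-hour totals quoted above; the fraction of development-queue hours that touched a MIC is not public, so it is treated as an unknown that ranges from 0 to 1. The function name and that parameter are my own for illustration, and for simplicity the numerator counts only the normal queue.

```python
# Core hours charged per queue, as quoted above from XDMoD.
NORMAL = 286_179_494      # normal queue: jobs that don't request MICs
NORMAL_MIC = 1_548_250    # jobs that need a single MIC
NORMAL_2MIC = 35          # jobs that need two MICs on the same host
DEVELOPMENT = 2_035_036   # development queue: MIC share is unknown


def non_mic_to_mic_ratio(dev_mic_fraction: float) -> float:
    """Ratio of normal-queue core hours to MIC core hours.

    dev_mic_fraction is an assumed fraction (0.0 to 1.0) of development-queue
    hours that used MICs; the real value is not publicly available.
    """
    mic_hours = NORMAL_MIC + NORMAL_2MIC + dev_mic_fraction * DEVELOPMENT
    return NORMAL / mic_hours


low = non_mic_to_mic_ratio(1.0)   # every development hour used a MIC
high = non_mic_to_mic_ratio(0.0)  # no development hour used a MIC
print(f"non-MIC : MIC core hours is between {low:.0f}x and {high:.0f}x")
```

Under these assumptions the ratio lands somewhere between roughly 80x and 185x, which is consistent with the "around 100x" and "over two orders of magnitude" descriptions depending on how the development queue is counted.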

On the basis of job quantity, the results are much the same:

Between 8x and 80x more jobs did not require a MIC coprocessor than jobs that did, depending on how many development jobs were using MICs. In more certain terms, jobs that don't use MICs (normal queue) represent over 83% of all of the jobs run on Stampede. Thus, the gross disparity between the core hours charged to MIC-enabled jobs vs. pure CPU jobs is not a trick of arithmetic--users just aren't running many jobs that require MIC coprocessors. The fact that MIC jobs' share of core hours is disproportionately smaller than their share of jobs submitted also indicates that MIC jobs, on average, aren't running very long. These sorts of patterns can be indicative of a large number of non-production jobs that either complete or fail quickly.

Over Time

Stampede was the world's first large-scale deployment of Xeon Phi, so it's very feasible that although the aggregate utilization of Xeon Phi remains low, its adoption is increasing. Is this true?

The above diagram shows the top five most utilized queues in terms of core hours charged during each month of 2013 to date. The normal and development queues (blue and teal lines, respectively) are pretty flat, and this is to be expected since these queues guarantee the basic compute functionality of any computer (CPUs and memory); any software can run on these queues' nodes.

However, there is pretty significant growth in the number of hours consumed by MIC-enabled jobs (purple line). Noting the logarithmic y axis, the growth seen since May 2013 is superexponential, which is great news for MIC adoption. It's also quite interesting that GPU uptake on Stampede is growing, since these GPU nodes are relatively few in number and are shared with the visualization queue. The roughly linear growth of GPU usage on the plot's logarithmic axis indicates that GPU uptake is still reflecting an impressive exponential increase in usage.
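For readers less used to reading log-scale plots: a straight line on a logarithmic y axis means exponential growth, and a line that bends upward means faster-than-exponential growth. A minimal sketch of that test is below. The two series are made-up illustrations, not Stampede data, and the helper name is hypothetical.

```python
import math


def log_second_differences(series):
    """Second differences of log10(series).

    Near zero  -> straight line on a log axis (exponential growth).
    Positive   -> line curves upward on a log axis (superexponential growth).
    """
    logs = [math.log10(value) for value in series]
    first = [b - a for a, b in zip(logs, logs[1:])]
    return [b - a for a, b in zip(first, first[1:])]


# Hypothetical monthly core-hour totals, for illustration only.
exponential = [100 * 2 ** month for month in range(6)]           # doubles monthly
superexponential = [100 * 2 ** (month ** 2) for month in range(6)]

print(log_second_differences(exponential))       # all approximately zero
print(log_second_differences(superexponential))  # all positive
```

With only a handful of monthly data points, a test like this is suggestive at best, so the "superexponential" label should be read as a description of the curve's shape so far rather than a forecast.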

The final interesting feature of the above plot is the dramatic spike in MIC usage in April. Drilling down to the core hours charged on each day that month...

It looks like someone submitted MIC jobs to the queue (red line) in such a large quantity that non-MIC jobs (blue line) were starved of nodes. The broader spike in usage starting on April 25 coincides with the "Optimize Your Code for the Intel Xeon Phi" workshop that began that day, so it looks like the excessive number of hours spent this month was due to a few special events.

Job Characterization

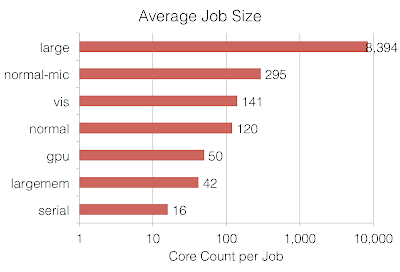

So we've established that utilization of Xeon Phi is low overall, but the uptake is growing rapidly. The next layer of detail that would be helpful to examine is what exactly users are doing with these coprocessors. Without installing probes on the compute nodes (which TACC actually does; I'm not sure if the results are publicly available though), we can get an idea of what kinds of jobs are represented in each queue by drilling down to job size:

At first glance, this is quite surprising--it appears that the average MIC job is actually larger than the average non-MIC (normal) job. As it turns out though, the difference here is due to the fact that looking at the average job size of a fundamentally non-Gaussian distribution is not meaningful. In the case of the normal queue, there is a vast number of extremely small (single-node) jobs that drags the entire average job size down to a very small value. By comparison, normal-mic jobs are far fewer in number and the distribution is less skewed, so the average size appears to be large.

However, the fact remains that the average MIC job is quite large. Given that the minimum size of any job is 16 cores (hence the serial queue having an average job size of exactly 16.0), the 295-core average MIC job spans about 18 nodes. This is perplexing, as I would expect most people who submit MIC jobs to test a lot of small-scale, single-MIC jobs before scaling out. Drilling down to the node count distribution of jobs submitted to the normal-mic queue...

It turns out that this 18-node average job size for MIC-enabled jobs is a result of a highly non-Gaussian distribution of MIC job sizes. Over half of all MIC jobs submitted ran on a single node, but 20% of those MIC jobs used sixteen or more nodes at once. When you average all of these job sizes together, you wind up with a strange number (18 nodes) that doesn't really represent anything meaningful.
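To make the point about averages concrete, here is a small sketch. The 295-core and 16-cores-per-node figures come from the text above; the list of job sizes is a made-up sample I chose to mimic the shape described (over half single-node, 20% at sixteen or more nodes) and is not the real distribution.

```python
import statistics

CORES_PER_NODE = 16          # minimum job size on Stampede, per the text
AVG_MIC_JOB_CORES = 295      # average normal-mic job size reported by XDMoD

# Convert the reported average from cores to nodes.
avg_mic_job_nodes = AVG_MIC_JOB_CORES / CORES_PER_NODE  # about 18 nodes

# Hypothetical node counts for ten jobs, for illustration only:
# six single-node jobs, two small jobs, and two large jobs.
job_nodes = [1, 1, 1, 1, 1, 1, 2, 4, 64, 104]

mean_nodes = statistics.mean(job_nodes)      # pulled up by the two large jobs
median_nodes = statistics.median(job_nodes)  # what a typical job looks like

print(f"reported average: {avg_mic_job_nodes:.1f} nodes")
print(f"sample mean: {mean_nodes} nodes, sample median: {median_nodes} nodes")
```

In this toy sample the mean is 18 nodes even though no job actually ran on 18 nodes and the median job ran on one, which is why the median (or the full histogram) is the more honest summary for skewed distributions like this.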

User Demographics

As mentioned above, one user is often capable of skewing the results of a workload analysis, so it's often helpful to get an idea of how many users are contributing to each data point.

I was quite surprised by the above data; there are over ten times as many unique users who have submitted non-MIC jobs as users who have submitted MIC jobs. Specifically, only 138 users** have ever submitted MIC jobs on Stampede. I would have guessed that the number of us who submitted a MIC job just to play around would be much larger, but apparently I am one of just 138 curious individuals. To put this into context, there are over twice as many users who submit serial (16-core or fewer, non-MPI) jobs on Stampede as there are users who submit MIC jobs.

The number of different allocations (separate scientific projects) that need MICs looks quite similar; this is due to the fact that a large number of projects only have one user who ever charges jobs. The next level of detail is to examine how many MIC jobs each allocation is charging. Is one particular group submitting most of these MIC jobs?

There's actually a nice spread to MIC usage. The top five consumers of MIC cycles are

- 22% - Porting an earthquake simulation code to Xeon Phi

- 17% - Developing programming tools to automatically generate Xeon Phi-compatible code

- 4.9% - Incorporating Xeon Phi support in a popular application framework

- 4.4% - Calculating statistical models in environmental sciences

- 4.1% - A parallel programming class

In particular, I'm very happy to see that #1 and #4 are users in the domain sciences who are actually trying to use Xeon Phi for their intended purposes, and the framework being developed in #3 is popular in the astrophysics community. In line with the rapidly accelerating adoption trends mentioned earlier, it looks like people are putting effort into developing application support for MICs.

** It's worth pointing out that although 90% of Stampede is allocated for XSEDE usage and is reflected in XDMoD, the remaining 10% discretionary time can contribute significantly to figures such as the unique MIC user count. According to TACC, there are actually over 400 unique users who have touched the MICs, but the difference between the XDMoD number and the TACC number cannot be described using publicly available information.

The Take-Away

Despite having over 6,000 Intel Xeon Phi coprocessors, TACC's Stampede system is seeing extremely low utilization of these coprocessors. The vast, vast majority of users on Stampede are using that machine just as they would any other large-scale cluster: they want to run regular old MPI code on regular old CPUs.

This is not entirely unexpected, especially given that the MICs have not officially been in production (although note that XSEDE PIs have been incorporating MICs into their proposals). However, I suspect a lot of people have blindly accepted the marketing from Intel that Xeon Phi is a magical product since existing MPI and OpenMP codes can run on it. As this quick workload analysis shows, there is no magic being realized. Xeon Phi is not seeing widespread use yet.

On a FLOPS Basis

Of the 9.6 petaflops of performance boasted by Stampede, 7.4 petaflops (or 77%) are provided by the Xeon Phi accelerators and 2.2 petaflops are provided by the CPUs. If you take into consideration that less than 6% (on a core-hour basis) of the jobs running on Stampede use these MICs, it turns out that Stampede is grossly underutilized--somewhere close to 75% of Stampede's deliverable FLOPS are not being delivered because users' applications can't use Xeon Phi. This is close to a 25% overall utilization level for the machine, which is abysmal in the world of non-accelerated clusters.
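The back-of-the-envelope arithmetic behind those percentages can be sketched as follows. The petaflop figures and the 6% bound come from the text; the model itself is a simplifying assumption of mine: MIC FLOPS are counted as reachable only during core hours charged by MIC jobs, those jobs are assumed to use the MIC fully, and CPU FLOPS are assumed to be fully used all the time.

```python
PEAK_TOTAL_PF = 9.6    # Stampede's aggregate peak, petaflops
PEAK_MIC_PF = 7.4      # portion provided by Xeon Phi
PEAK_CPU_PF = 2.2      # portion provided by CPUs
MIC_JOB_SHARE = 0.06   # upper bound: "less than 6%" of core hours use MICs

# Fraction of the machine's peak that lives on the coprocessors.
mic_fraction = PEAK_MIC_PF / PEAK_TOTAL_PF

# Assumption: MIC FLOPS sit idle whenever the job on the node is not a MIC job.
idle_fraction = mic_fraction * (1 - MIC_JOB_SHARE)

# Whatever is left is the best-case share of peak that jobs could touch.
reachable_fraction = 1 - idle_fraction

print(f"MIC share of peak:      {mic_fraction:.0%}")
print(f"peak FLOPS left idle:   {idle_fraction:.0%}")
print(f"best-case utilization:  {reachable_fraction:.0%}")
```

This crude model gives numbers in the low 70s and high 20s, in the same neighborhood as the "close to 75%" and "close to 25%" figures above. It is an upper bound on utilization, since real jobs rarely sustain peak FLOPS on either the CPU or the MIC.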

This is not to say that TACC is doing a terrible job of getting users on their machines, or that Xeon Phi is a dead end. The uptake curve I showed indicates that the adoption rate of Xeon Phi is both outpacing NVIDIA GPUs (on Stampede! not necessarily overall in the world!) and growing at a more-than-exponential rate. Given that Stampede was the first platform for this and we would therefore expect MIC utilization to start at dead zero upon deployment, Xeon Phi adoption seems to be on a good track so far. I expect uptake to accelerate even further upon the release of systems based on socketed Knights Landing.

On a Cost Basis

Update (10/1): A few readers, perhaps guided by insideHPC's unfortunate choice of a summary quote for this post, may walk away from this thinking that MICs are a waste of money in a production system since they will be providing cycles that most users cannot use. This is not necessarily the case though--on a cost-per-FLOP basis, MICs (and GPGPUs) provide absurdly good value for the users who can use them, and there is some cost-to-benefit balance where the incorporation of MICs will provide substantially better capabilities to a subset of users at a cost that is proportional to that scientific gain.

Stampede is a great example of this; as +Bill Barth mentioned, 9% of Stampede's overall acquisition cost was used to triple the peak deliverable FLOPS via the MICs. The potential benefit of having 3x the FLOPS, even if only a few users ever use the coprocessors, is staggeringly high given TACC's minimal investment in MIC.
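To put a rough number on "absurdly good value," the sketch below works only from the two figures quoted above (9% of cost, 3x the peak FLOPS). It makes one loud assumption: the remaining 91% of the acquisition cost is attributed entirely to CPU FLOPS, even though in reality it also paid for memory, interconnect, storage, and everything else. That assumption flatters the MICs, so treat the result as an upper bound.

```python
MIC_COST_SHARE = 0.09    # share of acquisition cost spent on MICs, per TACC
FLOPS_MULTIPLIER = 3.0   # MICs "triple" the peak deliverable FLOPS

# Normalize CPU-only peak FLOPS to 1.0; the MICs supply the rest of the 3x.
cpu_flops = 1.0
mic_flops = FLOPS_MULTIPLIER * cpu_flops - cpu_flops

# Assumption: everything that is not MIC spend is charged to the CPU FLOPS.
cpu_cost_per_flop = (1 - MIC_COST_SHARE) / cpu_flops
mic_cost_per_flop = MIC_COST_SHARE / mic_flops

advantage = cpu_cost_per_flop / mic_cost_per_flop
print(f"peak CPU FLOPS cost about {advantage:.0f}x more than peak MIC FLOPS")
```

Even if the true advantage is several times smaller once the shared infrastructure is apportioned fairly, the gap helps explain why a modest MIC investment can make sense on a machine whose users mostly run CPU-only codes.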

In contrast, systems that are coprocessor-rich (e.g., three or four MICs per conventional compute node) may have a substantially larger fraction of the acquisition budget invested in these limited-use FLOPS. At some degree of MIC saturation, you will encounter a point at which you are spending money on MICs that could've been more productively spent on regular CPUs/nodes. The trick, of course, is in figuring out where that sweet spot is between MIC investment and scientific impact. Of course, this sweet spot is also a moving target.

Implications for Systems Design

If your campus is interested in deploying a supercomputer to satisfy an immediate need for useable cycles by a broad community of users, Xeon Phi might not be the way to go. You're apt to get an impressive number of LINPACK flops out of the machine to satisfy your funding agency, but if Stampede is an indication of overall trends among the broader computational scientific community, a significant majority of your users' jobs (80% of jobs, 95% of cycles) won't utilize the coprocessors up front. Even if adoption rates continue at faster-than-exponential rates, it's unlikely that your Xeon Phis will see significant usage during the lifetime of the machine.

Update (10/1): I think it's also important to stress that Stampede's MICs only went into production in August 2013. Given that Stampede was the first at-scale deployment of MICs yet those MICs just went into production a month ago, it follows that Xeon Phi technology is just barely ready for production in the wider HPC industry. If you are deploying MICs right now, you have to realize that you are deploying an emerging technology that is still largely untested. If a substantial part of your budget is going into building a MIC-rich system, that system will fundamentally be a testing platform, not a production resource, for the majority of its lifetime.

Ultimately, don't bet the house on Xeon Phis unless you are purposely building a testbed or you know your users have applications that will run on them. Adoption rates on Stampede are looking really good, but the level of raw utilization remains extremely low.