IOPS are dumb

"How many IOPS do you need?"

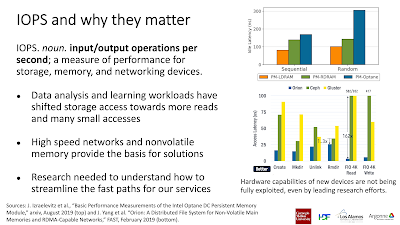

I'm often asked this by storage vendors, and the question drives me a little bonkers. I assume they ask it because their other customers bring them black-and-white IOPS requirements, but I argue that anyone would be hard-pressed to explain the scientific value of one I/O operation (versus one gigabyte) if ever called on it. And yet, IOPS are undeniably important; the illustrious Rob Ross devoted a whole slide to this at a recent ASCAC meeting:

I agree with all of Rob's bullets and yet I disagree with the title of his slide; IOPS are dumb, and yet ignoring them when designing a performance-optimized parallel file system is even more dumb in contemporary times. So let's talk about the grey area in between that creates this dichotomy.

First, bandwidth is pretty dumb

If there's one constant in HPC, it's that everyone hates I/O. And there's a good reason: it's a waste of time because every second you wait for I/O to complete is a second you aren't doing the math that led you to use a supercomputer in the first place. I/O is the time you are doing zero computing amidst a field called "high performance computing."

That said, everyone appreciates the product of I/O--data. I/O is a necessary part of preserving the results of your calculation, so nobody ever says they wish there was no I/O. Instead, infinitely fast I/O is what people want since it implies that 100% of a scientist's time on an HPC system is spent actually performing computations while still preserving the results of that computation after the job has completed.

Peeling back another layer of that onion, the saved results of that computation--data--have intrinsic value. In a typical simulation or data analysis, every byte of input or output is the hard-earned product of a lot of work performed by a person or machine, and it follows that if you want to save a lot of bytes while spending as little time as possible performing I/O, the true value of a parallel storage system's performance is in how many bytes per second it can read or write. At a fundamental level, this is why I/O performance has long been gauged in terms of megabytes per second, gigabytes per second, and now terabytes per second. To the casual observer, a file system that can deliver 100 GB/s is more valuable than a file system that can deliver only 50 GB/s, all else being equal, for this very reason. Easy.

This singular metric of storage system "goodness" quickly breaks down once you start trying to set expectations around it though. For example, let's say your HPC job generates 21 TB of valuable data that must be stored, and it must be stored so frequently that we really can't tolerate more than 30 seconds writing that data out before we start feeling like "too much time" is being spent on I/O instead of computation. This turns out to be 700 GB/s--a rather arbitrary choice since that 30 seconds is a matter of subjectivity, but one that reflects the value of your 21 TB and the value of your time. It should follow that any file system that claims 700 GB/s of write capability should meet your requirements, and any vendor who can deliver such a system should get your business, right?
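The arithmetic behind that 700 GB/s figure is trivial, which is part of the point. A minimal sketch, assuming decimal units (1 TB = 1000 GB) and a hypothetical helper name:

```python
def required_bandwidth_gbs(data_tb, max_io_seconds):
    """Bandwidth (GB/s) needed to move data_tb terabytes within max_io_seconds."""
    data_gb = data_tb * 1000  # decimal units: 1 TB = 1000 GB
    return data_gb / max_io_seconds

# 21 TB written in no more than 30 seconds
print(required_bandwidth_gbs(21, 30))  # 700.0

# Loosen the (subjective) time budget to a minute and the requirement halves
print(required_bandwidth_gbs(21, 60))  # 350.0
```

The second call shows how sensitive the "requirement" is to the subjective tolerance for time spent in I/O.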

Of course not. It's no secret that obtaining those hero bandwidths, much like obtaining Linpack-level FLOPS, requires you (the end-user) to perform I/O in exactly the right way. In the case of the aforementioned 700 GB/s file system, this means

- Having each MPI process write to its own file (a single shared file will get slowed down by file system lock traffic)

- Writing 4 MiB at a time (to exactly match the size of the network transmission buffers, remote memory buffers, RAID alignment, ...)

- Using 4 processes per node (enough parallelism to drive the NIC, but not too much to choke the node)

- Using 960 nodes (enough parallelism to drive all the file system drives, but not too much to choke the servers)
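To put numbers on how narrow that recipe is, here is a rough sketch that just multiplies out the parameters from the list above. The function and variable names are mine, and it assumes the 700 GB/s is spread evenly over every process.

```python
NODES = 960
PROCS_PER_NODE = 4
TRANSFER_BYTES = 4 * 2**20   # 4 MiB per write call
TARGET_GBS = 700             # aggregate GB/s, decimal units

def hero_pattern(nodes=NODES, ppn=PROCS_PER_NODE,
                 transfer=TRANSFER_BYTES, target_gbs=TARGET_GBS):
    """Return (process count, GB/s per process, GB/s per node, writes/s overall)."""
    procs = nodes * ppn
    per_proc_gbs = target_gbs / procs
    per_node_gbs = target_gbs / nodes
    writes_per_sec = target_gbs * 1e9 / transfer
    return procs, per_proc_gbs, per_node_gbs, writes_per_sec

procs, per_proc, per_node, wps = hero_pattern()
print(f"{procs} processes, one file each")
print(f"{per_node:.2f} GB/s per node, {per_proc:.3f} GB/s per process")
print(f"{wps:,.0f} 4 MiB writes per second across the whole job")
```

Under these assumptions the hero number needs exactly 3,840 files and only around 167,000 write calls per second in aggregate; change any one of the four parameters and you are no longer running the test that produced 700 GB/s.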

I've never seen a scientific application perform this exact pattern, and consequently, I don't expect that any scientific application has ever gotten that 700 GB/s of performance from a "700 GB/s file system" in practice. In that sense, this 700 GB/s bandwidth metric is pretty dumb since nobody actually achieves its rated performance. Of course, that hasn't prevented me from saying these same dumb things when I stump for file systems. The one saving grace of using bandwidth as a meaningful metric of I/O performance, though, is that I/O patterns are a synthetic construct and can be squished, stretched, and reshaped without affecting the underlying scientific data being transmitted.

The value of data is in its contents, not the way it is arranged or accessed. There's no intrinsic scientific reason why someone should or shouldn't read their data 4 MiB at a time as long as the bits eventually get to the CPU that will perform calculations on it in the correct order. The only reason HPC users perform nice, 1 MiB-aligned reads and writes is because they learn (either in training or on the streets) that randomly reading a few thousand bytes at a time is very slow and works against their own interests of minimizing I/O time. This contrasts sharply with the computing side of HPC where the laws of physics generally dictate the equations that must be computed, and the order in which those computations happen dictates whether the final results accurately model some physical process or just spit out a bunch of unphysical garbage results.

Because I/O patterns are not intrinsically valuable, we are free to rearrange them to best suit the strengths and weaknesses of a storage system to maximize the GB/s we can get out of it. This is the entire foundation of MPI-IO, which receives I/O patterns that are convenient for the physics being simulated and reorders them into patterns that are convenient for the storage system. So while saying a file system can deliver 700 GB/s is a bit disingenuous on an absolute scale, it does indicate what is possible if you are willing to twist your I/O pattern to exactly match the design optimum.

But IOPS are particularly dumb

IOPS are what happen when you take the value out of a value-based performance metric like bandwidth. Rather than expressing how many valuable bytes a file system can move per second, IOPS express how many arbitrary I/O operations a file system can service per second. And since the notion of an "I/O operation" is completely synthetic and can be twisted without compromising the value of the underlying data, you might already see why IOPS are a dumb metric of performance. They measure how quickly a file system can do something meaningless, where that meaningless thing (an I/O operation) is itself a function of the file system. It's like saying you can run a marathon at five steps per second--it doesn't actually indicate how long it will take you to cover the twenty-six miles.

IOPS as a performance measure was relatively unknown to HPC for most of history. Until 2012, HPC storage was dominated by hard drives, which only delivered high-value performance for large, sequential reads and writes, and the notion of an "IOP" was antithetical to performance. The advent of flash introduced a new dimension of performance in its ability to read and write a lot of data at discontiguous (or even random) positions within files or across entire file systems. Make no mistake: you still read and write more bytes per second (i.e., get more value) from flash with a contiguous I/O pattern. Flash just raised the bottom end of performance in the event that you are unable or unwilling to contort your application to perform I/O in a way that is convenient for your storage media.

To that end, when a vendor advertises how many IOPS they can deliver, they really are advertising how many discontiguous 4 KiB reads or writes they can deliver under the worst-case I/O pattern (fully random offsets). You can convert a vendor's IOPS performance back into a meaningful value metric simply by multiplying it by 4 KiB; for example, I've been presenting a slide that claims I measured 29,000 write IOPS and 1,400,000 read IOPS from a single ClusterStor E1000 OST array:

In reality, I was able to write data at 0.12 GB/s and read data at 5.7 GB/s, and stating these performance metrics as IOPS makes it clear that these data rates reflect the worst-case scenario of tiny I/Os happening at random locations rather than the best-case scenario of sequential I/Os which can happen at 27 GB/s and 41 GB/s, respectively.
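The conversion is a single multiplication. A minimal sketch, assuming 4 KiB (4,096-byte) operations and decimal gigabytes, with hypothetical function names:

```python
IO_SIZE = 4 * 1024  # 4 KiB per operation

def iops_to_gbs(iops, io_size=IO_SIZE):
    """Convert an IOPS figure into GB/s (decimal) for a given I/O size."""
    return iops * io_size / 1e9

def gbs_to_iops(gbs, io_size=IO_SIZE):
    """Convert GB/s (decimal) back into IOPS for a given I/O size."""
    return gbs * 1e9 / io_size

print(round(iops_to_gbs(29_000), 2))     # 0.12 GB/s of random writes
print(round(iops_to_gbs(1_400_000), 1))  # 5.7 GB/s of random reads
```

Run in reverse, `gbs_to_iops(5.7)` lands at roughly 1.4 million, which is why a read rate in the thousands of IOPS could never correspond to gigabytes per second.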

Where IOPS get particularly stupid is when we try to cast them as some sort of hero number analogous to the 700 GB/s bandwidth metric discussed above. Because IOPS reflect a worst-case performance scenario, no user should ever be asking "how can I get the highest IOPS" because they'd really be asking "how can I get the best, worst-case performance?" Relatedly, trying to measure the IOPS capability of a storage system gets very convoluted because it often requires twisting your I/O pattern in such unrealistic ways that heroic effort is required to get such terrible performance. At some point, every I/O performance engineer should find themselves questioning why they are putting so much time into defeating every optimization the file system implements to avoid this worst-case scenario.

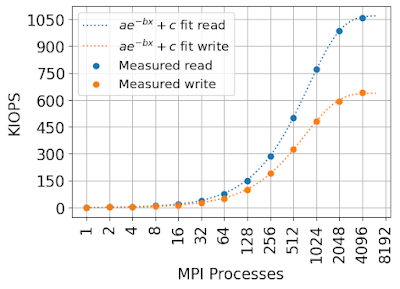

To make this a little more concrete, let's look at this slide I made in 2019 to discuss the IOPS projections of this exact same ClusterStor E1000 array:

Somehow the random read rate went from a projected 600,000 to an astonishing 1,400,000 read IOPS--which one is the correct measure of read IOPS?

It turns out that they're both correct; the huge difference in measured read IOPS is the result of the 600 kIOPS estimate coming from a measurement that

- ran for a much longer sustained period (180 seconds vs. 69 seconds)

- used fewer client nodes (21 nodes vs. 32 nodes)

- wrote smaller files (1,008× 8 GiB files vs. 1,024× 384 GiB files)

Unlike the IOPS measurements on individual SSDs which are measured using a standard tool (fio with libaio from a single node), there is no standard method for measuring the IOPS of a parallel file system. And just as the hero bandwidth number we discussed above is unattainable by real applications, any standardized IOPS test for a parallel file system would result in a relatively meaningless number. And yes, this includes IO-500; its numbers have little quantitative value if you want to design a parallel file system the right way.

So who's to say whether a ClusterStor E1000 OST is capable of 600 kIOPS or 1,400 kIOPS? I argue that 1,400 kIOPS is more accurate since I/O is bursty and a three-minute-long burst of completely random reads is less likely than a one-minute-long one on a production system. If I worked for a vendor though, I'm sure this would be taken to be a dishonest marketing number since it doesn't reflect an indefinitely sustainable level of performance. And perhaps courageously, the official Cray ClusterStor E1000 data sheet doesn't even wade into these waters and avoids quoting any kind of IOPS performance expectation. Ultimately, the true value of the random read capability is the bandwidth achievable by all of the most random workloads that will realistically be run at the same time on a file system. Good luck figuring that out.

Write IOPS are really dumb

As I said at the outset, I cannot disagree with any of the bullets in the slide Rob presented at ASCAC. That first one is particularly salient--there is a new class of HPC workloads, particularly in AI, whose primary purpose is to randomly sample large datasets to train statistical models. If these datasets are too large to fit into memory, you cannot avoid some degree of random read I/O without introducing biases into your weights. For this reason, there is a legitimate need for HPC users to demand high random read performance from their file systems. Casting this requirement in terms of 4 KiB random read rates to have a neat answer to the "how many IOPS do you need" question is dubious, but whatever. There's little room for intellectual purity in HPC.

The same can't be said for random write rates. Write IOPS are a completely worthless and misleading performance metric in parallel file systems.

In most cases, HPC applications approximate some aspect of the physical world, and mathematics and physics were created to describe this physical world in a structured way. Whether you're computing over atoms, meshes, or matrices, there is structure to the data you are writing out and the way your application traverses memory to write everything out. You may not write data out in a perfectly ordered way; you may have more atoms on one MPI process than another, or you may be traversing an imbalanced graph. But there is almost always enough structure to scientific data to squish it into a non-random I/O pattern using middleware like MPI-IO.

Granted, there are a few workloads where this is not true. Out-of-core sorting of short-read DNA sequences and in-place updates of telescope mosaics are two workloads that come to mind where you don't know where to write a small bit of data until you've computed on that small bit of data. In both these cases though, the files are never read and written at the same time, meaning that these random-ish writes can be cached in memory, reordered to be less random, and written out to the file asynchronously. And the effect of write-back caching on random write workloads is staggering.

To illustrate this, consider three different ways in which IOR can be run against an all-NVMe file system to measure random 4 KiB writes:

- In the naïve case, we just write 4 KiB pages at random locations within a bunch of files (one file per MPI process) and report what IOR tells us the write IOPS were at the end. This includes only the time spent in write(2) calls.

- In the case where we include fsync, we call fsync(2) at the end of all the writes and include the time it takes to return along with all the time spent in write(2).

- In the O_DIRECT case, we open the file with direct I/O to completely bypass the client write-back cache and ensure that write(2) doesn't return until the data has been written to the file system servers.
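The difference between the first two cases comes down to where the stopwatch stops. The sketch below is not IOR; it is a single-process toy that writes random 4 KiB pages to one local temporary file with `os.pwrite` and reports the rate both ways. The file size, write count, and function name are arbitrary choices of mine. The O_DIRECT case is left out because it needs aligned buffers and support from the underlying file system.

```python
import os
import random
import tempfile
import time

PAGE = 4096  # 4 KiB

def random_write_iops(path, n_writes=2000, file_pages=4096, seed=0):
    """Return (write-only IOPS, IOPS including the final fsync)."""
    rng = random.Random(seed)
    buf = os.urandom(PAGE)
    fd = os.open(path, os.O_WRONLY | os.O_CREAT, 0o600)
    try:
        t0 = time.perf_counter()
        for _ in range(n_writes):
            # Each pwrite usually returns once the data is in the page cache
            os.pwrite(fd, buf, rng.randrange(file_pages) * PAGE)
        t_write = time.perf_counter() - t0
        os.fsync(fd)  # force the cached dirty pages out to storage
        t_total = time.perf_counter() - t0
    finally:
        os.close(fd)
    return n_writes / t_write, n_writes / t_total

with tempfile.TemporaryDirectory() as tmpdir:
    naive, with_fsync = random_write_iops(os.path.join(tmpdir, "testfile"))
    print(f"naive (write calls only): {naive:,.0f} IOPS")
    print(f"including fsync:          {with_fsync:,.0f} IOPS")
```

The same writes produce two different "IOPS" numbers, and the gap depends entirely on how much work the cache deferred until the fsync.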

Again we ask: which one is the right value for the file system's write IOPS performance?

If we split apart the time spent in each phase of this I/O performance test, we immediately see that the naïve case is wildly deceptive:

The reason IOR reported a 2.6 million write IOPS rate is because all those random writes actually got cached in each compute node's memory, and I/O didn't actually happen until the file was closed and all cached dirty pages were flushed. At the point this happens, the cache flushing process doesn't result in random writes anymore; the client reordered all of those cached writes into large, 1 MiB network requests and converted our random write workload into a sequential write workload.
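A toy model makes the reordering concrete. This is only a sketch of the general idea (sort the dirty pages, then merge neighbours into requests of at most 1 MiB); it is not the algorithm any particular file system client implements, and the names are hypothetical.

```python
import random

PAGE = 4 * 1024            # 4 KiB dirty page
MAX_REQUEST = 1024 * 1024  # cap each merged request at 1 MiB

def coalesce(offsets, page=PAGE, max_request=MAX_REQUEST):
    """Merge page-aligned dirty offsets into contiguous (offset, length) extents."""
    extents = []
    for off in sorted(set(offsets)):  # rewriting a cached page costs nothing extra
        if extents:
            start, length = extents[-1]
            if start + length == off and length + page <= max_request:
                extents[-1] = (start, length + page)
                continue
        extents.append((off, page))
    return extents

# 20,000 random 4 KiB writes into an 8 MiB file (2,048 pages)
rng = random.Random(0)
dirty = [rng.randrange(2048) * PAGE for _ in range(20_000)]
extents = coalesce(dirty)
print(f"{len(dirty)} application writes -> {len(extents)} requests at flush time")
```

Once enough random writes pile up in the cache, nearly every page of the file is dirty and the flush degenerates into a handful of large, contiguous requests.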

The same thing happens in the case where we include fsync; the only difference is that we're including the time required to flush caches in the denominator of our IOPS measurement. Rather frustratingly, we actually stopped issuing write(2) calls after 45 seconds, but so many writes were cached in memory during those 45 seconds that it took almost 15 minutes to reorder and write them all out during that final fsync and file close. What should've been 45 seconds of random writes to the file system turned into 45 seconds of random writes to memory and 850 seconds of sequential writes to the file system.
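Since the number of writes is the same either way, the effect of moving the flush time into the denominator can be worked out from the two durations alone. A minimal sketch using the 45-second and 850-second figures above:

```python
def write_only_vs_total(write_s, flush_s):
    """How many times higher the write-only rate is than the fsync-inclusive rate."""
    return (write_s + flush_s) / write_s

def flush_fraction(write_s, flush_s):
    """Fraction of total elapsed time spent flushing cached writes."""
    return flush_s / (write_s + flush_s)

print(round(write_only_vs_total(45, 850), 1))  # 19.9
print(round(flush_fraction(45, 850), 2))       # 0.95
```

Counting only the time in write(2) for this run would overstate the rate by about a factor of twenty, because about 95% of the elapsed time was spent flushing.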

The O_DIRECT case is the most straightforward since we don't cache any writes, and every one of our random writes from the application turns into a random write out to the file system. This cuts our measured IOPS almost in half, but otherwise leaves no surprises when we expect to only write for 45 seconds. Of course, we wrote far fewer bytes overall in this case since the effective bytes/sec during this 45 seconds was so low.

Based on all this, it's tempting to say that the O_DIRECT case is the correct way to measure random write IOPS since it avoids write-back caches--but is it really? In the rare case where an application intentionally does random writes (e.g., out-of-core sort or in-place updates), what are the odds that two MPI processes on different nodes will try to write to the same part of the same file at the same time and therefore trigger cache flushing? Perhaps more directly, what are the odds that a scientific application would be using O_DIRECT and random writes at the same time? Only the most masochistic HPC user would ever purposely do something like this since it results in worst-case I/O performance; it doesn't take long for a user to realize this I/O pattern is terrible and reformulating their I/O pattern would increase their productive use of their supercomputer.

So if no user in their right mind does truly unbuffered random writes, what's the point in measuring it in the first place? There is none. Measuring write IOPS is dumb. Using O_DIRECT to measure random write performance is dumb, and measuring write IOPS through write-back cache, while representative of most users' actual workloads, isn't actually doing 4 KiB random I/Os and therefore isn't even measuring IOPS.

Not all IOPS are always dumb

1. Serving up LUNs to containers and VMs

2. Measuring the effect of many users doing many things

If everything is dumb, now what?

Is the storage system running at 100% CPU even though it's not delivering full bandwidth? Servicing a small I/O requires a lot more CPU than a large I/O since there are fixed computations that have to happen on every read or write regardless of how big it is. Ask your vendor for a better file system that doesn't eat up so much CPU, or ask for more capable servers.
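A back-of-the-envelope model shows why a fixed per-operation cost makes small I/O CPU-bound. Every parameter below (core count, microseconds per operation, microseconds per MiB) is a made-up placeholder rather than a measurement of any real server; only the shape of the result matters.

```python
def cpu_bound_gbs(io_size_bytes, cores=32, fixed_us_per_op=20.0, us_per_mib=50.0):
    """Upper bound on GB/s if server CPU were the only bottleneck.

    All default parameters are hypothetical placeholders.
    """
    cpu_us_per_op = fixed_us_per_op + us_per_mib * io_size_bytes / 2**20
    ops_per_sec = cores * 1e6 / cpu_us_per_op
    return ops_per_sec * io_size_bytes / 1e9

for size in (4 * 1024, 64 * 1024, 1024 * 1024):
    print(f"{size // 1024:>5} KiB I/Os: at most {cpu_bound_gbs(size):8.1f} GB/s")
```

With any positive fixed cost, the CPU-limited bandwidth drops sharply as the I/O size shrinks, so a server can run out of CPU long before it runs out of drive or network bandwidth.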