FASST will be DOE's opportunity to adapt, align, or...

FASST is an initiative within the US Department of Energy to build and deploy the world's most powerful AI systems for science and is shaping up to be the next big thing after the US Exascale Computing Project. With an eye towards an initial $12 billion budget, the program represents a bold vision of positioning the US government as the leader in AI for science. However, unlike exascale computing before it, FASST will also take the DOE into a field where industry--not government--is leading in terms of investments, scale, and innovation around HPC. Whereas the DOE used to lay the tracks for societally transformative technologies and dictate the direction and speed, it now finds itself having to jump on a train that is already moving. The direction is set, and the challenge of FASST will be to position the DOE amidst an industry that is rolling forward without waiting for government direction.

Recognizing this new position, the DOE recently issued an RFI on the FASST initiative with the following premise:

The Department of Energy’s Office of Critical and Emerging Technologies (CET) seeks public comment to inform how DOE and its 17 national laboratories can leverage existing assets to provide a national AI capability for the public interest.

This RFI seeks public input to inform how DOE can partner with outside institutions and leverage its assets to implement and develop the roadmap for FASST, based on the four pillars of FASST: AI-ready data; Frontier-Scale AI Computing Infrastructure and Platforms; Safe, Secure, and Trustworthy AI Models and Systems; and AI Applications; as well as considerations for workforce and FASST governance.

While I'm sure that all the usual suspects in the HPC industry will submit responses, the questions in this FASST RFI are ones on which I have strong personal opinions as well. The DOE's gradual misalignment between its HPC ambitions and the greater undercurrents of the technology industry contributed to my decision to leave my job at a DOE lab, and by coincidence, I now find myself working squarely in the AI infrastructure industry. I've spent the last year in a front-row seat, witnessing the staggering pace and scale at which the AI industry is solving many of the same infrastructure challenges that the DOE HPC community has faced for decades.

Because I still care about the DOE mission and HPC for scientific discovery, I decided to write my own personal response to this FASST RFI and publish it here out in the open. To be clear though,

- These are strictly my own opinions and have been unreviewed, unvetted, and unfiltered by anyone other than me. This includes my friends, family, cats, and employer, whose own opinions on these matters may be similar to or different from my own. Anything below that sounds like corporate shilling is coincidental, or at best, a reflection of the fact that I work at places whose values and opinions on technologies align with my own.

- I am not breaking any news in anything I write below, and I've made every attempt to provide sources for every statement I make that may raise eyebrows. Even though I probably have a little inside knowledge about the AI industry, whatever credibility it may sound like I have is the result of the fact that I just pay close attention to what's going on in the commercial AI industry. I read a ton of papers, press releases, blog posts, newsletters, and trade press because being informed is part of my day job, and I am just contextualizing those trends against where DOE HPC has been and may go in the future.

What follows are direct responses to each of the sections and questions posed in the FASST RFI.

1. Data

2. Compute

(a) How can DOE ensure FASST investments support a competitive hardware ecosystem and maintain American leadership in AI compute, including through DOE’s existing AI and high-performance-computing testbeds?

DOE must first define “American leadership in AI compute” very precisely. At present, American leadership in AI has happened in parallel to the US Exascale efforts; the race to achieve artificial general intelligence (and the AI innovation resulting from it) is being funded exclusively by private industry. For example, NVIDIA Tensor Cores first appeared in the Summit supercomputer in 2018, but the absence of this capability in Summit’s launch press release and subsequent scientific accomplishments paints a picture that, despite being the first flagship supercomputer to feature Volta GPUs, Summit had no bearing on the hardware innovation that resulted in the now-indispensable Tensor/Matrix Cores found in data center GPUs.

Directly supporting a competitive hardware ecosystem for AI compute will be a challenge for FASST. Consider that NVIDIA, which holds an overwhelming majority of the market share of AI accelerators, recently disclosed in a 10-Q filing that almost half of its quarterly revenue came from four customers who purchased in volumes that exceed the purchasing power of ASCR and NNSA programs. It follows that the hardware ecosystem is largely shaped by the needs of a few key corporations, and the DOE no longer serves as a market maker with the purchasing power to sustain competition by itself.

Thus, the DOE should acknowledge this reality and align its approach to AI technology with the needs of the AI industry to the fullest extent possible. Areas for alignment include:

- Computational approaches such as using the same model architectures, approaches to scaling jobs, and using available arithmetic logic units. Numerical approaches to solving physical problems may have to fundamentally change to realize the next generation of scientific insights from modeling and simulation.

- Orchestration and management of resources which includes using existing approaches to security, authentication, and federation. I estimate that at least 75% of the software required to realize national infrastructure like IRI already exists in commercial computing, and retrofitting or modernizing DOE supercomputers to work with that infrastructure is likely easier than reinventing a parallel infrastructure designed for the peculiar ways in which HPC approaches orchestration and management.

- Infrastructural philosophies such as optimizing more holistically across the entire AI technology value chain by co-designing hardware not only with applications, but with power, cooling, data center, real estate, energy providers, and global supply chain. National-scale infrastructure must be viewed as a holistic, national-scale optimization.

- Policy approaches that avoid the substantial oversight and lengthy reviews that precede one-time capital acquisitions and inhibit agility to adapt to rapidly changing technology needs that accompany the breakneck pace of AI innovation. This agility comes at a higher cost/performance ratio than DOE's belt-and-suspenders approach to supercomputing, but the AI industry is betting that the realized value will outweigh those costs.

(b) How can DOE improve awareness of existing allocation processes for DOE’s AI-capable supercomputers and AI testbeds for smaller companies and newer research teams? How should DOE evaluate compute resource allocation strategies for large-scale foundation-model training and/or other AI use cases?

The DOE’s ERCAP model for allocations is already aligned with the way in which the private sector matches AI compute consumers with AI compute providers. When an AI startup gets its first funding round, it is often accompanied by connections to one or more GPU service providers as part of the investment since such startups’ success is contingent upon having access to reliable, high-performance computing capabilities (see examples here and here). Continuing this model through FASST is the most direct way to raise awareness amongst those small businesses and researchers who stand to benefit most from FASST resources.

Evaluating allocation strategies should follow a different model, though. Recognizing that the centroid of AI expertise in the country lies outside of the government research space, FASST allocations should leverage AI experts outside of the government research space as well. This approach will have several benefits:

- It reduces the odds of allocated resources being squandered on research projects that, while novel to the scientific research community, may have known flaws to the AI community.

- It also keeps DOE-sponsored AI research grounded to the mainstream momentum of AI research, which occurs beyond the ken of federal sponsorship.

DOE should also make the process fast because AI moves quickly. This may require DOE accepting a higher risk of failure that arises from less oversight but higher research velocity.

(d) How can DOE continue to support the development of AI hardware, algorithms, and platforms tailored for science and engineering applications in cases where the needs of those applications differ from the needs of commodity AI applications?

To the extent that scientific uses for AI diverge from industry’s uses for AI, the DOE should consider partnering with other like-minded consumers of AI technology with similarly high tolerances for risk to create a meaningful market for competition.

Collaborations like the now-defunct APEX and CORAL programs seemed like a step in this direction, and cross-agency efforts such as NAIRR also hold the potential for the government to send a unified signal to industry that there is a market for alternate technologies. If formally aligning FASST with parallel government efforts proves untenable, FASST should do all in its power to avoid contradicting those other efforts and causing destructive interference in the voice of the government to industry.

The DOE should also be very deliberate to differentiate:

- places where science and engineering applications truly diverge from industry AI applications, and

- places where science and engineering applications prefer conveniences that are not offered by hardware, algorithms, and platforms tailored for industry AI applications

This is critical because the success of FASST is incompatible with the pace of traditional scientific computing. Maintaining support for the multi-decadal legacy of traditional HPC is not a constraint carried by the AI industry, so the outdated, insecure, and inefficient use modalities around HPC resources must not make their way into the requirements of FASST investments.

As a specific example, the need for FP64 by science and engineering applications is often stated as a requirement, but investments in algorithmic innovation have shown that lower-precision data types can provide scientifically meaningful results at very high performance. Instead of a starting position of “FP64 is required” in this case, FASST investments should start from places like, “what will it take to achieve the desired outcomes using BFLOAT16?”

This aligns with the AI industry’s approach to problems; the latest model architectures and algorithms are never perfectly matched with the latest AI hardware and platforms due to the different pace at which each progresses. AI model developers accept that their ideas must be made to work on the existing or near-future compute platforms, and hard work through innovation is always required to close the gaps between ambition and available tools.
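As one illustration of the lower-precision point above, the sketch below uses mixed-precision iterative refinement, a well-known technique in which the expensive linear solves run in reduced precision and only the residual is computed in FP64. It is a minimal example under stated assumptions, not a method taken from the RFI or from any DOE code: NumPy has no BFLOAT16 type, so `float32` stands in for the low-precision format, and the function name `refine_solve` and the test matrix are hypothetical.

```python
import numpy as np

def refine_solve(A, b, low=np.float32, iters=5):
    """Solve A x = b using low-precision solves plus float64 residual correction.

    ASSUMPTION: float32 stands in for a lower-precision type such as BF16.
    A production version would factor A once and reuse the factorization.
    """
    A_low = A.astype(low)
    # Initial solve entirely in low precision.
    x = np.linalg.solve(A_low, b.astype(low)).astype(np.float64)
    history = []
    for _ in range(iters):
        r = b - A @ x  # residual computed in float64
        history.append(np.linalg.norm(r) / np.linalg.norm(b))
        # Correction solve in low precision, accumulated in float64.
        dx = np.linalg.solve(A_low, r.astype(low))
        x += dx.astype(np.float64)
    history.append(np.linalg.norm(b - A @ x) / np.linalg.norm(b))
    return x, history

# Hypothetical, well-conditioned test problem.
rng = np.random.default_rng(0)
n = 200
A = rng.standard_normal((n, n)) + n * np.eye(n)
b = rng.standard_normal(n)

x, history = refine_solve(A, b)
for step, rel_res in enumerate(history):
    print(f"step {step}: relative residual = {rel_res:.3e}")
```

The relative residual should fall from roughly single-precision accuracy toward double-precision accuracy within a few steps for a well-conditioned system like this one. Ill-conditioned problems may converge slowly or not at all, which is exactly the kind of gap that algorithmic work has to close.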

How can DOE partner with other compute capability providers, including both on-premises and cloud solution providers, to support various hardware technologies and provide a portfolio of compute capabilities for its mission areas?

The DOE may choose to continue its current approach to partnership where, to a first-order approximation, it is a customer who buys goods and services from compute capability providers. In this scenario, the role of those providers is to reliably deliver those goods and services, and as a part of that, periodically perform non-recurring engineering or codesign with its customers to align their products with the needs of their customers. There is a wealth of infrastructure providers who either sell hardware platforms or provide GPUs-as-a-hosted-service who will happily operate in this familiar mode, and the cost of their capital or services will be similarly aligned with the level of AI-specific value they deliver to the DOE.

However, partnership with true AI technology providers--those who develop hardware platforms for their own AI research, development, and service offerings--will bring forth two new challenges: misalignment of mission and mismatch of pace.

Alignment of mission

The DOE Office of Science’s mission is “to deliver scientific discoveries and major scientific tools to transform our understanding of nature and advance the energy, economic, and national security of the United States.” Broadly, its mission is to benefit society.

This mission naturally maps to the mission statements of the technology companies that have traditionally partnered with DOE. For example,

- HPE does not have a clear mission statement, but their goals involve “helping you connect, protect, analyze, and act on all your data and applications wherever they live, from edge to cloud, so you can turn insights into outcomes at the speed required to thrive in today’s complex world.”

- IBM also does not have a clear mission statement, but they state they “bring together all the necessary technology and services to help our clients solve their business problems.”

- AMD’s mission is to “build great products that accelerate next-generation computing experiences.”

These technology companies’ missions are to help other companies realize their visions for the world. Partnership comes naturally, as these companies can help advance the mission of the DOE.

However, consider the mission statements of a few prominent AI companies:

- OpenAI’s mission is “to ensure that artificial general intelligence benefits all of humanity.”

- Microsoft’s mission is “to empower every person and every organization on the planet to achieve more.”

- Anthropic’s mission is “to ensure transformative AI helps people and society flourish.”

AI companies’ missions are to benefit society directly, not businesses or customers. The AI industry does not need to partner with the DOE to realize its vision, because its mission is to “do,” not “help those who are doing.”

As such, the DOE and the AI industry are on equal footing in their ambition to directly impact everyday lives. It is not self-evident why the AI industry would want to partner with DOE, so if it is the ambition of the DOE to partner with the AI industry, it is incumbent upon DOE to redefine its role and accept some aspect of being the “helper” rather than exclusively the “doer.” The DOE must answer the question: how will the DOE help its AI industry partners achieve their mission?

The tempting, cynical answer may be “revenue,” but this would only be true of companies whose mission is to “help” (and sell), not “do.” The following was stated on Microsoft’s Q1 FY2025 earnings call by Microsoft CEO Satya Nadella:

One of the things that may not be as evident is that we are not actually selling raw GPUs for other people to train. In fact, that’s a business we turn away, because we have so much demand on inference…

The motives of the AI industry are to solve problems through inferencing using world-class models. Selling AI infrastructure is not a motive, nor is training AI models. AI infrastructure and training frontier models are simply prerequisites to achieving their stated goals. Thus, the DOE should not treat these AI industry partners as vendors, nor should it expect the AI industry to react to traditional partnerships (capital acquisitions, non-recurring engineering contracts) with the same level of enthusiasm as HPC vendors. It must rethink the definition of partnership.

Mismatch of priorities

Once the DOE and its AI industry partners have found common foundations for productive partnership, the DOE must be ready to move with the same agility and urgency as its partners. Historically, the DOE has not done this; for example, while the step from hundreds of petaflops (Summit) to exaflops (Frontier) was accomplished in four years at OLCF, Microsoft accomplished the same in less than two. Similarly, xAI and NVIDIA were able to deploy an exascale supercomputer, from first rack to first job, in less than three weeks. This is not to say the DOE is incapable of moving with this speed; rather, it is a reflection of differing priorities.

For example, keeping pace with the AI industry will require DOE to accept the compromises that, in the context of scientific computing, were not justifiable. The Frontier RFP was issued in 2018, and the system first appeared on Top500 in June 2022. Consider: what would it have taken to cut this time in half by imposing a 2020 deployment deadline? What additional science could have been accomplished, albeit at reduced scale, had Frontier been up and running for the two years between 2020 and 2022?

Alternatively, what would Frontier look like if the procurement didn't begin until 2020 with a 2022 deployment date? What risks and change-order requests would have been avoided had Frontier been based on more well-defined, near-term technologies on vendor roadmaps?

Ultimately, what advantages did those extra two years of procurement lead-time offer, and were the outcomes afforded by those two years worth the opportunity cost? Would those advantages still be present in the world of AI, where major technology decisions are being made much closer to delivery?

Another example of this mismatch is in the productive outputs of DOE's stakeholders when compared to the AI industry. Many DOE researchers' careers are graded based on metrics such as papers published and grants received, and this incentivizes DOE to spend time writing, serving on review panels, creating quad charts, and focusing on other tasks that either generate these outputs or help others generate outputs. The outcomes of these outputs, frankly, are less clear--how many times do these outputs get used outside of the "Related Work" sections of other papers? By comparison, AI practitioners in industry are incentivized to contribute to products that find use; writing a paper is rarely as valuable as shipping a useful feature, and there is a much higher ratio of heads-down-engineers to principal-investigator-like managers.

This is not to say that one way has more societal value or impact than the other, but it does pose significant friction when those two worlds come together in partnership. A DOE engineer pursuing a novel idea with the goal of authoring a paper will struggle to get the support of an industry AI engineer facing a customer deadline. In the HPC context, the DOE engineer would be the customer, so the partnership (and the monetary exchange underlying the partnership) provided equal incentive for both parties. However, as stated above, partnering with the AI industry will not necessarily be the same customer-supplier relationship, and the DOE will have to find ways in which it can align its constituents' values with those of their industry partners. There are ways in which this could work (for example, DOE ideates and industry productizes, or industry ideates and DOE publishes), but the implications on intellectual property, risks, costs, and other non-technical factors will not fit the same mold as traditional partnerships.

There are many more examples of this mismatch, and as with other aspects of public-private partnership, there may be valid reasons for DOE to continue doing what it has been doing. However, it is critical that DOE not assume that the "DOE way" is the right way when approaching AI. The discussion within DOE should start from a perspective that assumes that the "AI way" is the right way. What would have to change about the way DOE defines its mission and the way scientists use DOE resources to adopt it? The "DOE way" may truly offer the highest overall value even in the context of AI for science, but it is incumbent upon the DOE to prove that (to the rigor of the AI industry) if it intends to enter partnership with a "DOE-way" position.

3. Models

(b) How can DOE support investment and innovation in energy efficient AI model architectures and deployment, including potentially through prize-based competitions?

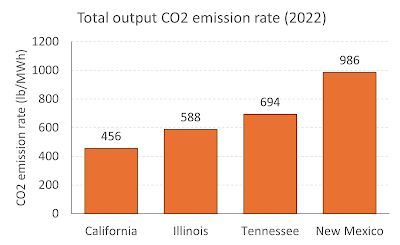

While energy-efficient technologies are undoubtedly important, the DOE's laser focus on energy efficiency (and downstream metrics such as PUE) reflects an unsettling blindness to the real issue at hand--carbon emissions, not energy consumption, drive global climate change. For example, a 20 MW supercomputer sited in California may have the same carbon footprint as...

- a 16 MW supercomputer in Illinois

- a 13 MW supercomputer in Tennessee

- a 9 MW supercomputer in New Mexico

according to a rough approximation from the 2022 eGRID data.
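The comparison above rests on simple arithmetic: two sites have the same footprint when power multiplied by grid carbon intensity is equal. The sketch below backs out the relative grid intensities implied by the megawatt figures quoted in the list. It is only an illustration; it does not use actual eGRID values, and the function names are hypothetical.

```python
# Power levels (MW) that the text says yield the same carbon footprint.
EQUIVALENT_POWER_MW = {
    "California": 20.0,
    "Illinois": 16.0,
    "Tennessee": 13.0,
    "New Mexico": 9.0,
}

def implied_relative_intensity(equivalent_power_mw, baseline="California"):
    """Grid carbon intensity of each site relative to the baseline.

    Equal footprints mean power_a * intensity_a == power_b * intensity_b,
    so intensity_b / intensity_a == power_a / power_b.
    """
    base_mw = equivalent_power_mw[baseline]
    return {site: base_mw / mw for site, mw in equivalent_power_mw.items()}

def equivalent_power(baseline_mw, relative_intensity, site):
    """Power at `site` with the same footprint as `baseline_mw` at the baseline."""
    return baseline_mw / relative_intensity[site]

ratios = implied_relative_intensity(EQUIVALENT_POWER_MW)
for site, ratio in ratios.items():
    print(f"{site}: {ratio:.2f}x the baseline carbon intensity (implied)")

# Hypothetical usage: what matches a 40 MW system in California?
print(f"Tennessee equivalent: {equivalent_power(40.0, ratios, 'Tennessee'):.1f} MW")
```

Read this way, the figures in the list imply that the New Mexico grid emits a bit more than twice as much carbon per unit of energy as the California grid, which is the gap that efficiency gains alone would have to overcome.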

Really addressing the underlying environmental challenges of AI will require DOE to accept that developing the most energy-efficient algorithms and hardware in the world is a half-measure as long as it keeps housing its supercomputers in parts of the country that still rely on coal and natural gas for over half of their energy fuel mix.

By contrast, leading AI infrastructure providers all have aggressive climate goals:

- Amazon aims to reach net-zero carbon emissions by 2040

- Google aims to reach net-zero carbon emissions by 2030

- Meta aims to reach net-zero carbon emissions by 2030

- Microsoft aims to be carbon negative by 2030

Furthermore, these companies are not limiting these ambitions to the carbon footprint of their direct operations (Scope 1 emissions) and the energy their datacenters consume (Scope 2 emissions). Rather, their goals include managing Scope 3 emissions, which include carbon emissions from shipping, employee travel, waste streams like packaging, and everything else that touches their business.

DOE's focus on energy-efficient AI model architectures and deployment seems grossly insufficient by comparison. If DOE is to take seriously the environmental impacts of AI, it must look beyond nudging algorithms and silicon since the AI industry is already pursuing goals that are significantly more aggressive and comprehensive than the DOE. Instead, it should look towards partnerships that allow it to leverage the investments made by the AI industry in achieving these net-zero or net-negative emissions goals. This may include divorcing itself from deploying supercomputers in specific congressional districts, moving beyond simplistic metrics such as PUE, and aligning itself with commercial partners with broad sustainability strategies that address Scope 3 emissions.

4. Applications

(b) How can DOE ensure foundation AI models are effectively developed to realize breakthrough applications, in partnership with industry, academia, and other agencies?

The public discourse from leading experts within the DOE often conflates "foundation models for science" with the foundation models that power leading AI applications such as GPT-4o. Presentations discuss "trillion-parameter" "foundation models specifically optimized for science" on one slide, then cite papers that present "foundation models for science" to justify the success. However, these promising "foundation models for science" do not have anywhere near trillions of parameters; rather, they have a few billion parameters and can be trained in just a couple of hours. As such, it is unclear what "foundation AI models" mean to DOE. The DOE must clarify whether it wants to train trillion-parameter models, or if it wants to train foundation models for science. The two have little overlap.

If the DOE wishes to define foundation models to be N-trillion-parameter models, it effectively does not have to do anything new to ensure that they are effectively developed to realize breakthrough applications. Although purpose-trained or purpose-fine-tuned LLMs can produce better results than non-fine-tuned models of equivalent size, LLM scaling laws have consistently held, showing that a fine-tuned, domain-specific LLM is simply not as good as a larger, non-domain-specific LLM.

DOE's focus should not be on training or fine-tuning N-trillion-parameter models, as this is exceptionally expensive, and industry is already doing this at scales that surpass DOE's current or future capabilities. For example, Meta's Llama-3.1 405-billion-parameter model took 54 days of computation to train on an exascale (~950 FP64 PFLOPS estimated) supercomputer. To train this same model on Frontier would have taken, at minimum, anywhere from three to six months of uninterrupted, full-system computation. The opportunity cost (all the scientific workloads that would not run during this time) is staggering, and pursuing a trillion-parameter model would double this at minimum.
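For readers who want to reproduce this kind of estimate, the sketch below uses the common rule of thumb that training a dense transformer costs roughly 6 FLOPs per parameter per token. It is a back-of-the-envelope illustration, not necessarily how the figures above were derived. The 405-billion-parameter size and the 54-day duration come from the text; the token count of about 15.6 trillion is an assumption not stated in the text, and the function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400

def training_flops(n_params, n_tokens):
    """Rule-of-thumb training cost for a dense transformer: ~6 * N * D FLOPs."""
    return 6.0 * n_params * n_tokens

def days_to_train(total_flops, sustained_flops_per_s):
    """Wall-clock days at a given sustained (not peak) training throughput."""
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY

N_PARAMS = 405e9    # from the text
TRAIN_DAYS = 54     # from the text
N_TOKENS = 15.6e12  # ASSUMPTION: approximate training token count

total = training_flops(N_PARAMS, N_TOKENS)

# Sustained throughput implied by finishing in 54 days, at whatever
# numerical precision the training run actually used.
implied_sustained = total / (TRAIN_DAYS * SECONDS_PER_DAY)

# Cost of a one-trillion-parameter model on the same number of tokens,
# relative to the 405-billion-parameter model.
scale_1t = training_flops(1e12, N_TOKENS) / total

print(f"total training compute: {total:.3e} FLOPs")
print(f"implied sustained rate: {implied_sustained:.3e} FLOP/s")
print(f"1T-parameter model:     {scale_1t:.2f}x the compute")
```

Under these assumptions, a trillion-parameter model needs about two and a half times the compute on the same data, and more if the token count grows with the model. Plugging a different sustained throughput into `days_to_train` shows how quickly the wall-clock time stretches on a smaller or less efficiently used system.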

If the DOE wishes to define foundation models to be much smaller, domain-specific models, its strongest assets are in the scientific data required to train such a model. A successful approach to training smaller foundation models for science may involve combining DOE's domain expertise with industry-developed frontier models to perform distillation, the process by which high-quality, synthetic data is generated to train small, efficient models. Data generation would require access to frontier models which may only be available through an industry-provided inferencing API, but training and downstream tasks can be performed on DOE computational resources at smaller scales.

These notional opportunities are contingent on DOE clarifying its ambitions though--partnerships cannot be formed without clearly aligned goals at the outset.

5. Workforce

(a) DOE has an inventory of AI workforce training programs underway through our national labs. What other partnerships or convenings could DOE host or develop to support an AI ready scientific workforce in the United States?

Brad Smith, president of Microsoft, recently published an essay titled "The Next Great GPT: Advancing Prosperity in the Age of AI" that builds upon a book, "Technology and the Rise of Great Powers," by Jeffrey Ding and describes the critical steps in which transformative technologies pervade society. Although not the goal of the essay, it paints a compelling picture of what is required to establish an AI-ready scientific workforce, and the DOE is uniquely positioned to lead many of those efforts.

Brad's essay states that "an advanced skilling infrastructure is indispensable in expanding the professions that create applications that make broad use of new technologies," and cites the example of ironworking in the 18th century, which benefitted England disproportionately due to its adoption of trade societies and other opportunities for hands-on learning. He further advocates for national AI skilling strategies that are designed not only to train engineers and system designers but also to enhance AI literacy throughout the entire economy. Bridging the gap between deep technical topics and the general public is something that DOE is uniquely experienced with over the course of its long history at the frontier of particle physics, nuclear technology, cosmology, and similarly difficult-to-understand technical areas, and increasing AI fluency is a mutual interest where industry and DOE have complementary strengths.

Brad also highlights that "new technology never becomes truly important unless people want to use it," and a major barrier to this is trust and safety. Citing the example of Edison and Westinghouse competing to show how safe their visions of electrification were, he rightly points out that a prerequisite to an AI-ready workforce is assurance that AI is aligned with societal and ethical values. While it is incumbent upon the AI industry to establish and maintain public confidence, the DOE has broader experience in maintaining public trust in dual-use technologies such as nuclear fission across multiple decades, demographics, and administrations. As such, public-private partnership towards safe and trustworthy AI that is aligned with the interests of the public is a key area of promise.

More tactically, the DOE can leverage its global reputation and extensive network to establish AI talent exchange programs, mirroring its successful collaborations in the HPC field with European and Asian counterparts. AI, like other Big Science domains, is a high-stakes technology being aggressively pursued by leading nations for its transformative societal and economic benefits. Attracting top talent from around the world will be critical to maintaining a globally competitive, AI-ready workforce in the United States.

6. Governance

(a) How can DOE effectively engage and partner with industry and civil society? What are convenings, organizational structures, and engagement mechanisms that DOE should consider for FASST?

This has been described in Section 2(a). DOE must find the ways in which it can keep pace with the AI industry, recognizing that the AI industry does not limit itself with months-long FOAs and years-long procurement cycles. Industry also does not gauge progress based on traditional peer review in conferences and journals (as evidenced by the number of foundational AI papers only published on arXiv). Partnerships with industry will require finding the areas where the interests, pace, and values of DOE and the AI industry overlap, and the DOE must acknowledge that this overlap may be small.

(b) What role should public-private partnerships play in FASST? What problems or topics should be the focus of these partnerships?

FASST must establish vehicles for public-private partnerships that go beyond the conventional large-scale system procurements and large-scale NRE projects. Both of these vehicles reinforce a customer-supplier relationship where money is exchanged for goods and services, but the new reality is that the AI industry is least constrained by money. FASST must provide incentives that outweigh the opportunity cost that partners face when dedicating resources to DOE and its mission instead of the commercial AI industry, and the promise of low-margin large-scale capital acquisitions is simply not sufficient.

To put this in concrete terms, consider the dilemma faced by individuals in industry who are conversant in both DOE HPC and AI: is their time better spent writing a response to a government RFI such as this one, or is it better spent developing insight that will improve the overall reliability of the next flagship training cluster?

Realistically, investing time in the government may slightly increase the chances of being awarded a major HPC system procurement, but that business would have

- High personnel cost due to the dozens of pages of technical requirements that touch on dozens of different competencies and experts

- High risk due to the need to price and bid on technologies that are beyond any credible hardware roadmap

- Weak value proposition due to the value of the procurement being heavily biased towards delivering commoditized hardware rather than reliability, agility, and speed of deployment

By comparison, a similarly sized opportunity could surface with a twenty-person AI startup that offers a much lower opportunity cost:

- Low personnel cost due to the general requirement of a specific AI training capability (X exaflops and Y petabytes of storage to train a model of Z capability) with an understanding that the solution will work by the delivery deadline, or the customer can simply walk away and go to another provider

- Moderate risk due to the delivery date aligning with current- or next-gen (not next-next-gen) hardware which is already well-defined and in development (or already in production and hardened)

- Strong value proposition because the procurement values a rapid deployment (weeks, not years), the agility to move on to the next-generation processor after a year, and the reliability required to run a full-system job for weeks, not hours.

When confronted with this choice, the right answer is usually to prioritize the latter opportunity because it aligns most closely with the values and competencies of the AI industry.

Extending the above example to the stated question, the traditional NRE- or Fast/Design/PathForward public-private partnerships pursued by DOE HPC may not be the best approach for engaging the AI industry. The level of funding is relatively modest but is accompanied by a relatively high burden of oversight, reporting, paper-writing, and wrangling over the specifics of intellectual property agreements. Industry has made incredible advancements in large-scale AI R&D, backed by billions of dollars in private investment. In comparison, accepting tens of millions of dollars in government funding for similar R&D may not offer a meaningful return. The opportunity cost of using these researchers for government projects could outweigh the benefits, especially when they could be applied to self-funded R&D instead.

Admittedly, these are all challenges that DOE may face when approaching public-private partnerships, and these challenges do not have obvious solutions. The interests of public and private sectors in AI have considerable overlap, so there should be plenty of areas of mutual interest in developing AI for science to benefit society. However, the first hurdle to overcome will be acknowledging that the mechanical details of such partnerships will not fit the molds of prior programs, and a complete re-thinking of the DOE's approach may be required.