GTC 2025 recap

I attended GTC for the first time this year and got to experience what turned out to be one of the strangest, over-the-top conferences I've ever attended. It took many typical conference activities (technical talks, an exhibit floor, after-hours parties) and mashed them together with some distinctively unusual conference elements like a keynote in a sports arena, a night market, and a live music stage running all week.

Though it felt like a regular tech conference at its surface, it didn't take me long to realized that the program was crafted to subtly spoon-feed attendees with messages and ideas that NVIDIA wanted them to hear. Jensen's keynote outlined what everyone should talk about for the rest of the week, and most of the technical sessions all built off of things from the keynote in a way that make them seem like genuine industry trends to the casual observer. Things that NVIDIA didn't want attendees thinking about just didn't make the program, and all the usual opportunities for peers to compare notes in a structured way (like birds-of-a-feather sessions) did not exist.

With that being said, I think NVIDIA did an effective job in landing the following messages:

- Everyone should be talking about inferencing now. Success in AI is measured in tokens per second, and everything that improves that metric is worth showcasing. I was surprised by how much key-value caching (which accelerates inferencing...) came up; people usually don't talk about storage, but storage (in the context of KV caches) came up in many of the big sessions.

- Reasoning models will be the future, and they will disrupt all earlier GPU demand projections. Compared to "one-shot" models like GPT-4o, reasoning models process so many more tokens during inferencing. They also shift the computational burden of training from pre-training to fine-tuning, because reinforcement learning (used to teach a pre-trained, one-shot model to reason) requires a ridiculous amount of training tokens. So in addition to the giant supercomputers needed for pre-training, we'll all need more GPUs for fine-tuning and for serving models too.

- Get ready for ridiculous power demands. NVIDIA is preparing for 600 kW racks in the 2027 timeframe, and they had one on display to prove they weren't crazy. The more power you can put into a rack (or many GPUs you can put within the maximum reach of copper NVLink cables), the more efficiently you can turn kilowatts into tokens.

- NVIDIA still cares about science. I was surprised by how much attention was given to scientific advancements brought about by the latest GPUs. AI was still front and center of most things, but headliners like Jensen and Ian Buck went out of their way to lead with science stories.

In addition to the NVIDIA-led themes, I noticed a few trends that weren't part of the official narrative:

- NVIDIA is actually lagging when it comes to using GPUs at scale. Many of the talks I attended presented techniques for training and inferencing at scale that are actually a year or more behind the state of the art. Of course, this shouldn't be too surprising since NVIDIA isn't a model training company, but I found that some speakers were presenting "new" ideas that have actually been used (albeit quietly) for a long time now. It's great that NVIDIA is now implementing them in open-source frameworks for the masses to use, but a lot of the content at GTC was less about novelty and more about accessibility.

- The GPU market is stratifying into hyperscale AI and everyone else. NVIDIA flooded the exhibit hall with GB300 hardware as if GB200 was old hat, and the splashiest technical sessions shared learnings from using Blackwell. Talks by smaller AI companies and GPUaaS providers focused on H100 and H200 though, and talks by scientists still leaned heavily on A100. This suggested that it may be a year before Blackwell winds up in the hands of the broader AI market, and even longer before scientific applications are using it.

I'll elaborate on all of these (and more!) below, and I've broken my notes up into the following sections:

Jensen's Keynote

I started the conference by waiting in a half-mile line for an hour to see the Jensen keynote in the 19,000-seat SAP Center on Tuesday morning.

The whole conference seems to hinge on the keynote, and it was clear that NVIDIA uses it to frame the topics attendees should be talking about for the rest of the week. Jensen monologued for two hours without a teleprompter, often talking so quickly and with such confidence that it was easy to accept his conclusions without realizing that some of them were based on shaky foundations. This was most pronounced in his elaborate explanation of how Blackwell-based “AI factories” would make us all billions of dollars.

Blackwell will print money

The first ninety minutes of the keynote took us on a journey that ended with inferencing on GB200 NVL72 at the center of the universe. I don't think training on GB200 came up once; instead, Jensen told a story, complete with fancy visual aids and semi-quantitative plots, to hammer home the idea that tokens equal revenue, and by extension, the latest NVIDIA GPUs will let us all just print money if we buy enough of them.

This journey started with Jensen's assertion that we should all prepare for a 100x increase in token generation rates over what we expected prior to reasoning models like OpenAI o1 and DeepSeek-R1. By his logic, this 100x increase is the product of two factors:

- Reasoning models, which are the future, require 10x more tokens to be generated per inferencing request. These tokens are generated as the model talks to itself during the reasoning process.

- People will expect responses as quickly as they come now, which will require 10x lower latency per token to reach the same end-to-end response time they are accustomed to in today's one-shot (non-reasoning) models.

These 10x factors are completely made up, of course, but this premise paves the way for needing "AI factories" which "manufacture" tokens en masse. He predicted that we will reach a trillion dollars being spent in annual datacenter buildout by 2028, and the majority of this will go towards GPUs. But don't worry about who's paying for all of that! Jensen went on to show a few of these wacky "AI factory pareto" plots to help us visualize how much money we could make by spending a trillion dollars on NVIDIA GPUs:

The y axis is meant to represent the total token generation rate normalized to a power budget, and the x axis represents how "smart" the AI is. Jensen asserted that the area under the plot--the product of token generation per megawatt and "AI smartness"--is the total opportunity to make piles of money.

He tried doing some mental math on stage on the basis that OpenAI charges $10 per million tokens for ChatGPT to illustrate this, but his numbers came out all wrong as best I can tell. What I think he meant to say is that a megawatt worth of Hopper (at 2 million tokens per second per megawatt) can crank out over $500M in gross revenue per year, but a megawatt of GB200 NVL72 (at 9.2 million tokens per second per megawatt at the same x value, or "smartness") can do well over $2.5 billion.

Although his math around the revenue opportunity didn't actually add up, I think the qualitative conclusion they were meant to demonstrate still landed: everyone should just throw away their HGX H100 servers and replace them with GB200 NVL72. Since Blackwell generates so many more tokens per megawatt in inferencing, they can convert megawatts to megabucks so much better than Hopper. And Jensen said as much during his keynote; he literally said, "when Blackwell starts shipping in volume, you couldn't give Hoppers away." Bad news for all the buyers with hundreds of millions in outstanding orders of H200.

The big problem I see with this plot is that it says nothing about the total price of all this GPU infrastructure. In fact, it seems to suggest that NVIDIA would be justified in pricing GB200 NVL72 significantly higher than H100 since its value (as measured by its ability to crank out $10 per million tokens) is so much higher. His slides claimed that GB200 NVL72 has a 25x advantage in single-shot inferencing (GPT-4o) and 40x advantage in reasoning models (o1/o3/DeepSeek-R1) over H100. Again, the math here didn't quite add up as best I could tell; the 25x-40x benefits reflects some goofy metric of tokens-per-second-squared-per-user-megawatt and overstates the throughput improvement by a power of two.

But never mind that! The next slide's impressive visuals of infinite GPUs drove the point home without the audience having to understand NVIDIA's math:

Ultimately, if a 100 MW "AI factory" built on GB200 NVL72 can generate "40X more token revenue" than an equivalent 100 MW datacenter full of H100, a bunch of wild conclusions may follow:

- NVIDIA would be justified in charging up to 40x more per B200 GPU since they can make you so much more money per megawatt. Paying 10x more per GPU might be a bargain!

- It is worth it to throw away your H100 GPUs and use that power on GB200 NVL72 GPUs even though your H100s are less than two years old, because GB200 is so much better at converting megawatts into money.

- Investors can expect order-of-magnitude growth in revenue since NVIDIA customers have a strong reason to upgrade every year instead of the typical 5-year depreciation life of a GPU server and pay significantly more.

- A 100 MW "AI factory" would generate over $275 billion in gross revenue in one year if we follow Jensen's example of $10 per million tokens for GPT-4o. Why wouldn't we build a trillion dollars worth of datacenters by 2028 with that kind of opportunity?

Jensen went so far as to say that the old "the more you buy, the more you save" is now "the more you buy, the more you make." By this logic, the latest NVIDIA GPUs will just print money by converting megawatts into tokens.

This messaging, and the fast, confusing ride through the plots that Jensen used to get to these conclusions, were clearly aimed at investors rather than engineers. Investors may not be as quick to stop and wonder where all this demand for tokens are actually coming from, and they may also be much more willing to overlook the fact that nobody in the generative AI game is actually making any net profit off of inferencing yet--except the shovel-sellers like NVIDIA.

What was interesting

The long discussion around how much revenue Blackwell will generate did contain a few technically interesting points that I didn't fully appreciate until I went over my notes.

Dynamo

Foremost, Jensen announced Dynamo, an open-source "operating system for the AI factory"--again, a catchy brand name that encapsulates a complex set of orchestration optimizations that the casual observer may not understand. At its core, Dynamo capitalizes on the fact that there are two phases to inferencing LLMs with different computational requirements:

- Prefill: This happens every time you submit a new query to an LLM and requires running the entire input query and context through the entire model. This is compute-bound and benefits from being distributed over many GPUs. At the end of prefill, you get the first output token from the LLM. You can also save the key and value vectors that were computed for each layer of the model in a KV cache.

- Decode: This happens repeatedly as the response to the query is built out from the first output token. Decode involves loading a bunch of cached key and value vectors from HBM over and over and doing a little bit of computation based on the previously emitted output token, so it's memory bandwidth-bound. At the end of decode, you have the full response to your query.

The best way to distribute a model across GPUs is different for prefill and decode as a result of their different bottlenecks. For prefill, it's best to spread the model out over many GPUs to get lots of FLOPS, but for decode, it's best to spread the model over only a few GPUs to maximize cache hit rate from the KV cache.

The magic of Dynamo is that it will maintain pools of GPUs configured in both ways on all the GPUs in an NVLink domain, and it will dispatch prefill tasks to the prefill-optimized pools and decode to the decode-optimized pools. As the inferencing workload mix varies, Dynamo will also slosh GPUs between being prefill-optimized and decode-optimized, allowing both phases of inferencing to run optimally across a single rack of NVL72-connected GB200s.

If none of this sounds familiar, don't feel too bad--I didn't understand inferencing this deeply at the start of GTC either. One of my big personal takeaways from the conference is that I need to understand inferencing a lot better than I thought. However, the various speakers at GTC did a good job of explaining the sophistication with which Dynamo can map parts of tasks to GPUs.

Reconfigurable infrastructure

Jensen also showed an AI factory pareto plot for a reasoning model and highlighted that achieving the optimal balance of aggregate throughput (y axis) for a given user experience (x axis) requires parallelizing the reasoning model across GPUs in a huge variety of ways:

The plot shows that the optimal throughput for short answers (lower x values) is achieved using expert parallelism (EP) varying between 8 GPUs and 64 GPUs but keeping both prefill and decode stages on the same nodes (disagg off). If answers get very long (higher x value) and there isn't much in the conversation yet (low % context), optimal GPU utilization requires a different parallelization strategy such as combining tensor parallelism (TEP) and expert parallelism (EP). Changing how a model is distributed across GPUs on the fly is much easier with a larger NVLink domain in the same way it's easier to pack oddly shaped items into a big suitcase than a small one.

Jensen called this "reconfigurable infrastructure," and I suppose it is since it relies on NVLink's dynamic repartitioning capability. Whatever you choose to brand it though, having a large, high-bandwidth communication domain provides more opportunities to efficiently use all of the GPUs in it, and bigger NVLink domains makes for more efficient overall GPU utilization when you have a smart orchestrator like Dynamo to continually remap and balance evolving workloads.

Technology announcements

After the lengthy explanation of how GB200 NVL72 is a goose that lays golden eggs, Jensen tried to cram a bunch of other topics into the final thirty minutes. Two in particular stood out to me:

- Hardware innovations and roadmaps, including information about Blackwell Ultra, Vera-Rubin, Rubin Ultra, and the 600 kW Rubin Ultra rack. Jensen also raced through his announcement of what may be the first mass-market switches with copackaged optics, Quantum-X Photonics and Spectrum-X Photonics. I'll talk about these in a separate section below.

- Physical AI and robotics, including a demo of Blue, a robot that was powered by a new physics engine called Newton that is being developed by DeepMind, Disney Research, and NVIDIA. Though cute, it wasn't at all clear what the robot was actually doing autonomously (if anything). However, Jensen proclaimed that "physical AI" and robotics will be the next big thing that follows the burgeoning field of agentic AI. Given that robotics were prominent in both the start and end of the keynote, I expect this is a big bet that NVIDIA is making despite the superficial attention it was given throughout the presentation.

A few Blackwell-based developer platforms were also announced including:

- DGX Spark, the productized version of the DIGITS project announced at CES in January. It is a $3,000 miniature computer featuring a 20-core Mediatek CPU (10x Cortex-X925 and 10x Cortex-A725), minified Blackwell GPU (capable of 1 PF FP4 with 2:4 sparsity), and 128 GB of LPDDR5X.

- DGX Station, a Blackwell refresh of NVIDIA's developer desktop with a full-blown Grace CPU and Blackwell Ultra GPU with 288 GB of HBM3e. No indication of pricing, but there's no way a full superchip is going to be affordable to prosumers.

A few open-source models were also released:

- NVIDIA Llama Nemotron Reasoning Models, continuing the tradition of terribly named LLMs. The Super variant is a 49-billion parameter reasoning model that is fine-tuned for instruction in enterprise environments. Not sure exactly what that means though.

- NVIDIA Isaac GR00T N1, a 2.2-billion parameter multimodal, reasoning-capable model specifically for human robotics. It accepts image, text, robot state, and action tokens, then outputs motor action tokens.

New hardware!

As someone most drawn to big infrastructure, I enjoyed the hardware technologies that debuted at GTC this year the best. Jensen talked about three future generations of GPUs (Blackwell Ultra, Vera Rubin, and Vera Rubin Ultra) and announced a new silicon photonics switch platform (Spectrum-X Photonics and Quantum-X Photonics) during his keynote, and the exhibition floor was full of interesting first- and third-party hardware technologies.

GB300 on display

Now that B200 is "in full production," NVIDIA made sure that everyone was confident that the Blackwell Ultra (B300) would be following on later this year. Scores of booths at the expo had identical GB300 boards from NVIDIA alongside their GB200 boards, and my employer's booth was no exception:

From top to bottom, the salient features shown are:

- Four NVLink connector assemblies (shown with orange caps)

- Four B300 GPUs, each with two reticle-limited dies

- Two Grace CPUs

- Four ConnectX-8 NICs (surrounded by copper plates)

Compared to GB200, there are a couple changes:

- All four GPU packages fit on a single baseboard, whereas GB200 used two discrete 1C:2G boards. According to a talk Ian Buck gave, putting everything on a single board allows for better power distribution.

- Everything is now socketed using LGA, meaning that one bad GPU won't require you to throw out the entire board and all the good CPUs and GPUs on it. This is a big improvement over GB200, where two GPUs and one CPU were all soldered to one board.

- The NICs are now onboard and upgrade from 400G ConnectX-7 to 800G ConnectX-8.

- The platform is intended to be 100% liquid cooled, whereas GB200 was around 20% air-cooled.

The integrated server sleds look pretty similar to the GB200 with the notable difference being that it is now 100% liquid-cooled:

The above photo shows HPE's implementation, but all the other manufacturers' designs looked very similar since they're all minor twists on NVIDIA's reference platform. You can see the liquid manifold in the center of the sled; this takes the place of a row of fans in GB200.

These GB300 servers are similar enough to GB200 that they're compatible with the GB200 rack infrastructure. NVIDIA had both DGX GB200 (their NVL72 rack) and DGX GB300 on display, and they look identical:

Despite the power and cooling being identical between rack-scale GB200 and GB300, GB300 provides a 50% increase in FP4 performance and HBM capacity over GB200 at iso-power. This is a result of B300 using new silicon rather than just shrinking B200.

Vera Rubin and beyond

NVIDIA also announced more details about the upcoming Vera Rubin and Vera Rubin Ultra platforms, due out in 2H2026 and 2H2027, respectively.

The new Vera CPU was described in a talk by Ian Buck, where he claimed that the choice to have relatively low core count (88) compared to competitors (e.g., 192-core AMD Turin) was the result of dedicating more floorspace to a robust on-chip network. Those 88 cores will be a custom NVIDIA design, not Arm-standard Neoverse V-series cores as in Grace, and will support two threads per core. He also said that it'll only take around twelve threads to saturate memory bandwidth, and both LPDDR and DDR will be supported.

The Rubin GPU didn't get a lot of attention by comparison. It'll be faster (3.3x more FP4/FP8 performance than B300), but its power and cooling requirements were not discussed. It'll have the same 72-GPU NVLink domain size as Blackwell, but curiously, NVIDIA chose to redefine the "NVL##" nomenclature for Rubin: 72-GPU, rack-scale Vera Rubin will be branded as NVL144 to reflect that each GPU package has two reticle-limited GPU dies. Thus, while B200, B300, and Rubin will all have two reticle-limited dies per package and fit 72 such packages in a single NVLink domain, Blackwell is branded NVL72 while Rubin will be NVL144.

Rubin Ultra was the most interesting announcement from an infrastructure standpoint. Compared to Rubin, Rubin Ultra will:

- Move from 2 GPUs per package to 4 GPUs per package

- Move from a domain size of 144 GPUs (72 packages) up to 576 GPUs (144 packages) per NVLink domain

- Move to a 600 kW rack(!)

Interestingly, the advertised FP4 performance for a single Rubin Ultra die appears to be the same as a Rubin die, and I think I've correctly summarized the normalized specifications below:

| GPU | Reticle-limited dies | Dense FP4 per die | HBM per die | Dies per NVL | Release |

|---|---|---|---|---|---|

| B200 | 2 | 5 | 96 HBM3 | 144 | 2H24 |

| B300 | 2 | 7.5 | 144 HBM3e | 144 | 2H25 |

| Rubin | 2 | 25 | 144 HBM4 | 144 | 2H26 |

| Rubin Ultra | 4 | 25 | 256 HBM4e | 576 | 2H27 |

The claim of a 600 kW rack is what caught the most attention in HPC circles, as the prospect of a standard 21" OCP rack pulling that much power and generating that much heat seems absurd. However, NVIDIA had a proof of concept of this 600 kW rack--code-named Kyber--on display at the conference, and I'll write more about that below.

Finally, Jensen quickly mentioned that the GPU to follow Rubin would be named Feynman, and he flashed this slide up to show the NVIDIA roadmap's progression over the next three years:

The choice to name a GPU after Richard Feynman surprised me, given Feynman's documented misogyny and allegations of physical abuse against women. I know of at least one supercomputer center which will never name a supercomputer after Feynman for this reason, and with so many noteworthy scientists whose legacies aren't as blemished, this choice seems unnecessarily problematic. That said, NVIDIA's own record here is not without issue, so perhaps this decision reflects their relative prioritization of scientific celebrity over social responsibility. But if I was a descendent of Vera Rubin, I'd be furious that there would be a product called "Vera Feynman" on the roadmap.

High-power racks

The Rubin Ultra NVL576 rack's claims of 600 kW generated a lot of buzz amongst us infrastructure folks at GTC since that seems like an impossibly large amount of power and cooling required for such a small space. Cray EX4000 probably sets the standard for high power density HPC racks, but even those can only support up to 400 kW, and they are about twice as wide as a standard OCP (ORv2) rack. Thus, this NVIDIA NVL576 rack, code-named Kyber, would have 3x the power density of today's leading racks. As ridiculous as this seems, there were a couple of demonstrations on the exhibition floor that suggested how can be achieved.

NVIDIA's 600 kW Kyber

The most notable was a mockup of an NVIDIA Kyber rack, complete with compute and NVLink Switch blades on display. At a glance, each Kyber rack has four compute chassis, each with

- Eighteen front-facing, vertically mounted compute blades. Each blade supports "up to" sixteen GPUs and two CPUs each. I presume a "GPU" is a reticle-limited piece of silicon here.

- Six (or eight?) rear-facing, vertically mounted NVLink Switch blades. All GPUs are connect to each other via a nonblocking NVLink fabric.

- A passive midplane that "eliminates two miles of copper cabling."

Here is a photo of the rear of a Kyber rack, showing all four chassis:

Examining one of those chassis closely, you can see two groups of four blades:

Six of these blades are identical NVLink Switch blades, but it wasn't clear what the other two were. It looks like the odd two blades have SFP+ ports, so my guess is that those are rack controller or management blades.

The front of the Kyber rack shows the row of eighteen compute blades in each chassis:

Given there are four chassis per rack, this adds up to 72 compute blades per rack and NVLink domain. This means there are 8 GPUs per compute blade; given that Rubin Ultra will have four GPUs per package, it follows that each compute blade has two GPU packages. However, a placard next to this display advertised "up to 16 GPUs" per compute blade, so I'm not sure how to reconcile those two observations.

From the above photo, you can see that each blade also has front-facing ports. From the top to the bottom, there appear to be

- 4x NVMe slots, probably E3.S

- 4x OSFP cages, probably OSFP-XD for 1.6 Tb/s InfiniBand links

- 2x QSFP-DD(?) ports, probably for 800G BlueField-4 SuperNICs

- 1x RJ45 port, almost certainly for the baseboard management controller

NVIDIA also had these blades pulled out for display. They are 100% liquid-cooled and are exceptionally dense due to all the copper heat pipes needed to cool the transceivers and SSDs:

The front of the blade, with the liquid-cooled SuperNIC (top), InfiniBand transceivers (middle), and SSDs (bottom), is on the left. Four cold plates for four Rubin GPUs are on the right, and two cold plates for the Vera CPUs are to the left of them. I presume the middle is the liquid manifold, and you can see the connectors for the liquid and NVLink on the right edge of the photo.

A copper midplane replaces the gnarly cable cartridges in the current Blackwell-era Oberon racks:

This compute-facing side of the midplane has 72 connector housings (4 × 18), and each housing appears to have 152 pins (19 rows, 4 columns, and two pins per position). This adds up to a staggering 10,000 pins per midplane, per side. If my math is right, this means a single Kyber rack will have over 87,000 NVLink pins.

Although the midplane has cams to help line up blades when they're being seated, these sorts of connectors freak me out due to the potential for bent pins. Unlike Cray EX (which mounts compute blades at a right angle to switch blades to avoid needing a midplane entirely), Kyber mounts both switches and compute vertically. This undoubtedly requires some sophisticated copper routing between the front-facing and rear-facing pins of this midplane.

It's also unclear how NVLink will connect between the four chassis in each rack, but the NVLink Switch blade offers a couple clues on how this might work:

Each switch blade has six connector housings on the right; this suggests a configuration where midplanes are used to connect to both compute blades (with four housings per blade) and to straddle switch blades (with two housings per switch):

It's unlikely that the 7th generation NVLink Switches used by Vera Rubin Ultra will have 576 ports each, so Kyber probably uses a two-level tree of some form.

In addition to the NVLink fabric, there were a lot of details missing about this Kyber platform:

- A sidecar for both power and cooling was mentioned, but the concept rack had this part completely blanked out. It is unclear how many rectifiers, transformers, and pumps would be required here.

- There wasn't much room in the compute rack for anything other than the Kyber chassis; InfiniBand switches, power shelves, and management may live in another rack.

- It wasn't clear how liquid and power from the sidekick would be delivered to the compute rack, but with 600 kW, cross-rack bus bars are almost a given. I'd imagine this changes the nature of datacenter safety standards.

- Although NVLink is all done via midplane, all the InfiniBand and frontend Ethernet is still plumbed out the front of rack. This means there will be 288 InfiniBand fibers and 144 Ethernet fibers coming out the front of every rack. For multi-plane fabrics, this will almost undoubtedly require some wacky fiber trunks and extensive patch panels or optical circuit switching.

However, seeing as how this was just a concept rack, there's still a few years for NVIDIA and other manufacturers to work this stuff out. And notably, Jensen went out of his way during the keynote to say that, although NVIDIA is committed to 576-way NVLink domains for Rubin Ultra, they may change the way these 576 dies are packaged. This leaves plenty of room for them to refine this rack design.

Dell's 480 kW IR7000

Dell also had its high-power, "Open Rack v3-inspired" rack on display at the show. Although currently capable of supporting 264 kW, its design is scalable to up to 480 kW--significantly higher power per square foot than the Cray EX4000 cabinet. And unlike Kyber, this rack is already shipping in its 264 kW variant.

Curiously, the model that Dell had on display wasn't the GB200 NVL72 or GB300 NVL72 that everyone else had on display; rather, it was showing a super-dense HPC-optimized rack, packed to the brim with GB200 NVL4 nodes:

Though this floor model showed a rack with 30x XE8712 servers (120x B200 GPUs), the rack supports up to 36 servers or 144 GPUs--slight more than the ~128 B200 NVL4 GPUs that the Cray EX4000 with EX154n blades can support on a square footage basis.

The rear of the rack is where the magic is:

You can see the rack-scale liquid manifolds on the left/right edges and the 480 kW-capable bus bar down the middle. Though this demo rack had a bunch of air-cooled power shelves and interconnect switches, I presume that it is capable of supporting 100% direct liquid cooling with the appropriate nodes, switches, and power infrastructure.

What I really liked about this rack is that Dell has developed a rack-scale solution that not only supports higher density than Cray, but they've made it compatible with the needs of both traditional HPC and hyperscale AI; this rack is just as suited for these GB200 NVL4 nodes as it would be for GB200/GB300 NVL72. As a result, HPC customers buying high-power, liquid-cooled configurations built on IR7000 will gain the benefit of a rack-scale platform that's already been shaken out by deep-pocketed AI types who are buying this rack at massive scale like CoreWeave.

By comparison, HPE maintains bifurcated HPC and AI product lines: HPE customers wanting NVL72 configurations buy "NVIDIA GB200 NVL72 by HPE," while those wanting GB200 NVL4 buy Cray EX154n. There is no apparent shared DNA between the Cray EX GB200 and the "NVL72 by HPE" lines, so HPC customers on the Cray platform do not benefit from the at-scale deployment learnings of the hyperscale AI platform. The Cray EX platform is getting long in the tooth though, so I hope to see HPE follow in the footsteps of Dell and converge their HPC and AI products into a single rack-scale infrastructure.

Until that happens though, Dell seems to be way out ahead of the pack with IR7000. Although not quite at the level of Kyber's 600 kW per rack, IR7000 has enough power and cooling headroom to support both Blackwell Ultra and Rubin at densities that surpass the best that HPE Cray has to offer.

Co-packaged optics

Another major announcement from NVIDIA was its new co-packaged optics switches which will start shipping later in 2025.

Although co-packaged optics enabled by silicon photonics have been talked about for as long as I've been in HPC, the technology has never progressed beyond the lab for reasons that I don't fully understand. NVIDIA appears to have finally overcome that hurdle and had a demo unit of their upcoming Quantum-X Photonics XDR InfiniBand switch on the show floor. To appreciate what co-packaged optics will bring to the table, first have a look at the "normal" 144-port XDR800 InfiniBand switch, the QM3400:

It has 72 OSFP-XD cages, and each cage supports two 800G XDR InfiniBand connections through an optical transceiver. These transceivers are individually responsible for converting electrical signals (on the OSFP side) into optical signals (via optical fibers with MPO12 connectors) using two or four lasers, and each 800G port uses around 30 watts to power these lasers. Doing the math, this amounts to over 4 kW of power from the pluggable transceivers alone.

The Quantum-X Photonics XDR InfiniBand switch looks quite different:

Instead of 72 OSFP-XD cages, there are 144 MPO12 optical connectors directly exposed on the front of the switch in a similar 18x4 grid. Instead of 72 OSFP-XD transceivers that individually convert between electrical and optical signals, there are 18 external laser source (ELS) modules plugged in along the top of the switch. Each ESL contains eight lasers, and these lasers provide always-on laser light that gets shared across all 144 ports.

Inside this chassis is where the magic happens:

You can see two liquid-cooled Quantum-X800 switch ASICs, just as you'd find in a non-silicon-photonics variant of the switch. Underneath each heat sink, though, lie six optical sub-assemblies that are attached to the switch ASIC. Each optical sub-assembly is detachable and contains three silicon photonics engines. Each silicon photonics engine connects to eighteen optical fibers: sixteen are data links that connect to the front panel of the switch, and the remaining two are connected to the external laser sources.

Micro-ring modulators (MRMs) are the magical silicon photonics devices embedded in each photonics engine, and they turn the constant laser light from the external laser sources into PAM4 signals that travel down the fibers and out of the switch. By putting this electrical-to-optical conversion so close to the switch ASIC, the 200G signals that comprise the 800G link only remain electrical for a very short distance, making it much easier to keep the signal clean before converting it to optical. This dramatically reduces the power required to transmit: Jensen said that each 800G port only requires 9W of power in the Quantum-X Photonics switch, whereas the pluggable version requires 30W per port.

An added bonus is that the lasers in this switch are no longer inline with the fibers. As a result, when lasers fail (and they frequently fail!), you can pull out an ELS module without touching the optical fibers. This is huge, since MPO12 optical connectors can be extremely sensitive to being disturbed if they're dirty or not seated perfectly. It's not uncommon to experience link flaps (and job failures!) when replacing transceivers just because the act of pulling transceivers can jostle neighboring optical connections to the point where they begin dropping data. Separating the failure-prone lasers and the touchy fibers makes the whole platform much simpler and robust to unintended disruptions during repairs.

Although there are fewer components in these Photonics switches, I didn't hear anything about the expected price difference between the 144-port XDR switch with and without co-packaged optics. Jensen framed the switch to co-packaged optics as a savings of $1,000 per cage since you no longer have to buy transceivers, so it wouldn't surprise me if some of these savings ($72,000 worth of transceivers per switch) are priced into the value of the Quantum-X Photonics switch.

Other themes

Aside from hardware, I also focused on attending sessions and having conversations that revolved around a few interests of mine.

Reduced precision

Despite all the talk about 8-bit and 4-bit floating point precision that came about as both NVIDIA and AMD GPUs announce hardware support for them, I didn't fully appreciate their complexity before attending GTC this year. I had assumed that enabling low-precision arithmetic was just a switch you could flip while training or inferencing to get an instant speedup. I also assumed that if you were reliant on FP64 (as most science applications were), the story was very different: you'd have to be prepared to do unnatural things to make low precision give you good performance and the right answers.

As it turns out, the difficulties of using low-precision arithmetic is way more similar for both AI and HPC communities than I would have ever guessed.

For training

The most eye-opening talk I attended about the realities of low-precision arithmetic was "Stable and Scalable FP8 Deep Learning Training on Blackwell." Contrary to my naïve prior assumption, you can't just cast every model weight from BF16 to FP8 and expect training to just work. Rather, training in such low precision requires figuring out all the places across a neural network where you can get away with low precision, then carefully watching the model as you train to make sure that low precision doesn't wreck your results.

More specifically, training with low precision requires dividing up the entire neural network into regions that are safe to compute with FP16, regions that are safe to compute with FP8, and regions (like softmax) which unsafe for both FP8 and FP16. Scaling factors are used to prevent underflow or overflow of FP8/FP16 by multiplying contiguous regions of low-precision values by a power-of-two constant as they are being computed. One scaling factor is used for an entire low-precision region though, so you can get in trouble if the values within a low-precision range have too much dynamic range--if a tensor cast as FP8 has values that vary by many orders of magnitude, the likelihood of underflow or overflow when applying a scaling factor to that tensor becomes high.

Figuring out how to partition every tensor in a deeply multi-layer language model into safe regions with low dynamic range--and making sure these partitions don't diverge into an unstably high dynamic range as the model trains--is a gnarly problem. To make this easier, NVIDIA ships their Transformer Engine library which implements various "recipes" that safely cast tensors down to FP8 using different strategies, like applying one scaling factor to an entire tensor, to each row, or to finer-grained "sub-channels" (parts of rows or parts of columns).

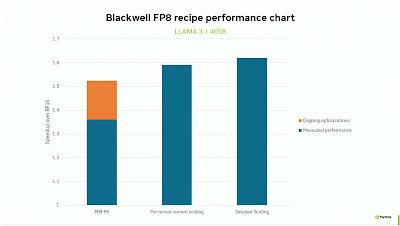

Hopper GPUs do not support fine-grained scaling factor blocks for FP8 in hardware, so benchmarking these different "recipes" on H100 reveals a wide variety of speedups compared to training in 16-bit precision:

Despite the fact that Hopper has 2x the peak FP8 FLOPS than BF16 FLOPS on its spec sheet, the above results show that you don't get nearly 2x performance when training using FP8. Furthermore, the sub-channel partitioning recipe used to train Deepseek (DSv3) yields the worst overall speedup because the fine-grained use of scaling factors had to be implemented inside the matrix multiplication loop by repeatedly multiplying these sub-channel blocks by their respective scaling factors.

This presentation went on to show that Blackwell closes this gap by introducing hardware support for microscaled FP8 (MXFP8), a format in which blocks of 32 values (either in a row or column) share one scaling factor:

The "MXFP8" bar is a reasonably close analog to the "DSv3 subchannel-wise" bar in the H100 plot and shows that it should be possible to get a 50%-60% speedup over training in BF16 by using Blackwell and the right FP8 recipe.

This left me wondering, though: why in the world would you ever use MXFP8 if it's so much worse than the other recipes where you apply just one scaling factor to a much coarser block of values such as a whole tensor?

As it turns out, you don't use MXFP8 because you can, you use it because you have to. When training in FP8, you have to carefully watch for signs of numerical instability--the loss function starting to go crazy, gradient norms jumping up or down dramatically, or values saturating at their precision limits and underflowing or overflowing, resulting in NaNs or Infs everywhere.

So, from what I can tell, actually training in FP8 looks something like this:

- You start by partitioning the model into FP8-safe, FP16-safe, and unsafe regions using as coarse of a granularity of scaling factors as possible to achieve the highest speedup over training only in FP16.

- You train until you start seeing numerical instabilities arising. Then you have to stop training and rewind a bit to undo the damage done by all the overflows and underflows.

- You re-train from an older checkpoint using higher precision to see if your instability goes away. If the instability doesn't occur when using high precision, you know your problem was the result of the dynamic range in your FP8 regions getting too big.

- Switch those FP8 regions with high dynamic range into finer-grained MXFP8 format and resume training. It will be slower than before since MXFP8 isn't as fast as coarser-grained FP8, but hopefully the numerical instability doesn't come back.

I think Transformer Engine helps with steps 1 and 4, but I think steps 2 and 3 require some degree of artistry or slick automation.

After these numerical gymnastics were all laid out, the question of training in FP4 came up from the audience. Although the speaker did say that training in FP4 was a goal, his answer made it sound like there is no clear path to getting there yet. For the time being, FP4 and FP6 just aren't usable for training.

As I left this talk, I couldn't help but think back to my days as a materials science graduate student just trying to make my molecular dynamics simulations go faster. There is no way I would have ever been able to figure out the tricks (or "recipes") needed to make lower-precision arithmetic work for my old Fortran code; I gave up trying lower precision after compiling with -fdefault-real-4 caused my simulation go haywire. If AI training people are having this much trouble getting low precision to work well for them, it's not going to be easier for the poor grad students just trying to get their next paper written.

For science

Although the talk on training using FP8 left me feeling like using low precision to solve scientific problems was hopeless, a few talks from the KAUST linear algebra brain trust offered counterbalance. Their work showed that the work required to make INT8 (and INT1!) work can yield fantastic speedups for traditional HPC.

Both David Keyes and Hatem Ltaief gave talks about their progress in using low-precision matrix operations to solve scientific problems such as calculating Hamming distances (used to compare the similarities between two genomes) and perform maximum likelihood estimation with Cholesky factorization (to model geospatial weather data). Though I have a poor grasp of linear algebra, I did understand the high-level message: using low precision for science is not too different from using low precision for training. It's not enough to just replace every FP64 calculation with an FP16 one, nor is it possible to brute-force emulate the accuracy of FP64 with INT8 every calculation.

Rather, you have to apply domain-specific tricks (recipes, in the AI training parlance?) that approximate problems as matrix-matrix arithmetic with low dynamic range. David Keyes showed how his group achieved 4000x speedups in finding the similarities across DNA sequences by casting that problem as matrix multiplications and exploiting the low dynamic range of element-by-element comparisons:

When comparing DNA sequences, each position is either the same or not which is a natural mapping to a binary INT1 value. So rather than wasting a whole INT8 to store this difference (or worse yet, using an algorithm that relies on FP32 or FP64 vectors), being algorithmically clever yields huge benefits.

Hatem Ltaief gave a related talk in which he pointed out that many matrices in scientific applications have blocks of values that are relatively unimportant, meaning they do not require high-precision arithmetic. Exploiting this where it occurs lets you get speedups that do not come at the cost of accuracy. And, like the FP8 training case, applying low precision with finer granularity lets you realize a higher speedup on GPUs but requires more care. This summary slide he presented captures this, with finer-grained precision games being played in the progression from "business as usual" on the left to the "linear algebraic renaissance" on the right:

As he put it, the key to applying low-precision arithmetic for science is "don't over-solve;" use only as much precision as is required by the problem and the numerics at play. FP64 is a big hammer that's easy to use everywhere, but better results can be had by taking a surgical approach. It seems like the road to future speedups will involve very clever people developing domain-specific recipes for exploiting low precision alongside domain scientists applying those recipes or libraries to their specific applications.

I'll also share a insightful though from a private conversation I had on this topic at GTC: FP64 isn't falling by the wayside because of AI, it's falling by the wayside because of physics. There's no more runway to keep packing FP64 ALUs into chips. AI just happened to come along at the right time to motivate the community to find ways to get past the limits of high-precision arithmetic.

As evidenced by the talk on training in FP8 on Blackwell, it's not like AI is getting a free lunch out of low precision any more than the scientific HPC community is. The overall strategy for making low precision work for science is the same as it is for model training: figure out places where low precision can work with increasingly finer granularity, then generalize those findings into recipes and libraries that are reusable by others. The difference is that the AI community has just accepted that this is the only path forward, whereas half the HPC community would rather cry about AI ruining everything instead.

New storage demands of inferencing

I spent a healthy amount of time hanging around my old pals in storage, including contributing to a breakfast panel with VAST Data on Rethinking AI Training Infrastructure. Though I went into GTC thinking that nobody would care about storage at all, I was very wrong--everyone had an opinion on storage...for inferencing!

Jensen and Ian Buck both reinforced that everyone must use KV caches effectively to keep token generation rates high and the stacks of cash flowing in. Both VAST and WEKA were promoting their solutions that offload KV caches to remote storage (VAST Undivided Attention and WEKA Augmented Memory Grid, respectively) as well; DDN, Pure, and others were less crisp in their messaging, instead focusing on delivering "10x business value" and being "the world's most powerful data storage platform for AI."

Though I got a crash course in the new storage challenges of inferencing throughout the week, the nicest overview came from a session led by CJ Newburn. In it, he proposed that there are fundamentally two types of computational workloads in AI:

- Compute-intensive workloads are the ones most of us are familiar with, where you load a big chunk of data into a GPU, compute on it, spit out an answer, and repeat. They typically operate on big batches of data whose shape and location are known before the GPU kernel launches, and the I/Os are initiated by the CPU as a result. It only takes a few GPU threads to saturate the data path between the GPU and storage for these workloads.

- Data-intensive workloads are different: each thread needs to load a small piece of data, compute on it, and based on the result, load a different piece of data and repeat. The data to be loaded is not known before the GPU kernel launches because that depends on an intermediate result of the computation, so each GPU thread needs to initiate the I/O and fetch tiny pieces of data without any prior knowledge of where that data might need to come from.

Compute-intensive problems are pretty close to typical meat-and-potatoes HPC workloads that do bulk data loads and need lots of bandwidth. The I/O demands of training as I've described them previously also fall squarely into this category. When done right, the PCIe bandwidth between the GPU subsystem and NICs or NVMe drives are the bottleneck, and NVIDIA developed GPUDirect Storage to shorten this data path.

However, data-intensive problems are a horror show to almost every storage subsystem because they amount to a giant IOPS problem. In order to saturate the PCIe bandwidth of a B200 GPU using 512-byte transfers, you'd need around 200 million IOPS; although a GPU subsystem can drive this many IOPS with its tens of thousands of threads, PCIe and NVMe drives cannot keep up, especially if those I/Os have to bounce through multiple PCIe switches. These highly concurrent random accesses present themselves when repeatedly retrieving precomputed key and value vectors (KV) during LLM inferencing.

To solve this GPU-driven IOPS challenge, NVIDIA developed SCADA. It is a client-server runtime that runs in GPUs, and it acts like a multilevel cache to shield the PCIe, CPU, and storage from the full force of 100,000+ GPU threads all trying to read random data from storage. As I understood it, SCADA does this in a few ways:

- It takes full ownership of NVMe block devices and implements an NVMe driver inside the GPU. This keeps random I/Os from having to be processed on the host CPU.

- It enables peer-to-peer PCIe in a way analogous to GPUDirect. This avoids sending I/Os all the way to host memory, and keeps traffic between GPUs and storage local to the PCIe switch they share.

- It coalesces I/O requests within the GPU and maintains a read-through cache, converting random I/Os into either local cache hits within the GPU or batches of I/Os that are packed together before being passed over PCIe to local NVMe or a remote SCADA server.

CJ showed some impressive performance numbers for random I/Os: the SCADA cache processor on the SCADA client can retire I/Os at 150 million 4 KiB IOPS when requested data is in the SCADA cache and process cache misses at 100 MIOPS. The demo app used in his example was a graph neural network that drove 45M IOPS, but I got the impression that SCADA's real strength will come when inferencing. There are a couple places during LLM inferencing where small, random reads have to happen repeatedly; the two mentioned in this talk were:

- KV cache lookups. As the response to your ChatGPT question is being built out word-by-word, the model needs to reference all the previous words in the conversation to decide what comes next. It doesn’t recompute everything from scratch; instead, it looks up cached intermediate results (the key and value vectors) from earlier in the conversation. These lookups involve many small reads from random places each time a new word is generated.

- Vector similarity search. When you upload a document to ChatGPT, the document gets broken into chunks, and each chunk is turned into a vector and stored in a vector index. When you then ask a question, your question is also turned into a vector, and the vector database searches the index to find the most similar chunks--a process that requires comparing the query vector against a bunch of small vectors stored at unpredictable offsets.

Just as GPUDirect Storage has become essential for efficient bulk data loading during training, SCADA is likely to become an essential part for efficiently inferencing in the presence of a lot of context--as is the case when using both RAG and reasoning tokens.

Resilience

Since I work on resilience and reliability for large-scale model training, I made sure to attend as many talks on this topic as possible. However, I found myself underwhelmed by the content being presented, as nothing presented was particularly new or novel. This isn't to say they were bad talks though, and I appreciate that others have started banging the drums about following good practices when training at scale. I just didn't leave GTC with many new ideas on how to deal with the reliability challenges on which I've been working.

The most comprehensive talk I attended on this topic was a ninety minute exposition on a bunch of reliability features being incorporated in the NVIDIA Resilience Extension (NVRx) and NeMo training framework. The speakers offered very clear descriptions of a bunch of resilience techniques for training, including:

- Detecting hung tasks. NVRx launches agents alongside the training processes that listen for heartbeats after every training step and fails the job if one process stops responding.

- Detecting stragglers. Wrapping critical loops in the training script enables tracking each worker's performance relative to both other workers and itself over time. If one GPU straggles consistently relative to its peers, or if one task begins slowing down over time, the straggler detector fails the job.

- Distributed, asynchronous checkpointing. Instead of writing a checkpoint using a single data parallel group, each data parallel group writes a different, non-overlapping part of the model's weights and activations.

- Local checkpointing. I described this in an earlier blog post; the NVIDIA implementation replicates to both local DRAM and a buddy node's local DRAM.

- Silent data corruption detection. NVIDIA's implementation only looks for NaNs and Infs, which is a pretty basic way of looking for these events. Looking for discontinuities in the loss or maintaining parity and redundant computations are much more robust ways this is done in the wild.

- Restart without requeue. This involves requesting more job nodes than you need, then relying on orchestration within the job allocation to swap in these "spare" nodes and remap ranks on job failure.

None of these are new concepts, but it is nice to see NVIDIA implementing them all under one roof (NeMo) so that less sophisticated model trainers don't have to try to implement this all themselves. I was also glad to see NVIDIA stand up and acknowledge that silent data corruptions are a fact of life when training at scale on their GPUs. Nobody likes to talk since silent data corruptions because they arise from problems in the GPU hardware, but having software strategies to detect and mitigate them is essential.

I had a few other observations after this talk:

- I've seen every one of these techniques being applied by my customers for more than two years now, so I was surprised to see NVIDIA only coming around to them now. This speaks to NVIDIA's overall maturity in being able to train large language models themselves, but as I said earlier, NVIDIA isn't a model-making company.

- The scope of NVRx and these resilience features of NeMo are strictly limited to deciding whether the job should be aborted or not. If a straggler or hung task is detected, the job is killed, but then it's someone else's problem to ensure that the cause of the failure is mitigated before the job is re-launched. This is a shame, because automatically stopping a floundering job is only half of the challenge. Automatically mitigating the cause of a failure and automatically restarting the job are both essential to ensure high GPU utilization during training.

- NVIDIA seems very married to Slurm for these features which doesn't make sense to me. The straggler detection and restart-without-requeue features both require running agents alongside the training processes on nodes, and this seems like a natural fit for sidecars in Kubernetes. The restart-without-requeue takes this further and relies on dynamic resource scaling and a bunch of auxiliary services running across job nodes, things which Slurm does not handle well. Again, Kubernetes seems like a much better fit for that type of orchestration.

The other noteworthy resilience-related talk I attended was on "Fault-tolerant managed training" and presented by Crusoe. It opened with some quantitative fault rate data from a 1,600-GPU H200 cluster they have in Iceland:

And with a little estimation, this data suggests they experienced 205 failures during the four-month period shown. Making a few further assumptions, this amounts to a system mean time to interrupt of 14 hours and a mean time to interrupt for a single H200 HGX node of 119 days.

This slide aside though, the talk mostly focused on extolling the virtues of running virtual machines (Crusoe uses Cloud Hypervisor), holding back hot spares from customer-committed capacity on each cluster, and automating failure detection and maintenance. All the big cloud providers have been doing these things since the beginning of time, so they aren't new ideas. But I am glad another cloud provider is preaching this message specifically to the HPC crowd. I often feel that those of us who know how this works just take it for granted, and those in HPC who don't know just assume it doesn't exist.

Scale

The final topic to which I gravitated was scale--scale of deployments, who's deploying what and for whom, and where the trajectory of scale-out is pointing.

Aside from all the "AI Factory" buzz in the keynote, the first scale-centric session I attended at GTC was a panel packed with senior leaders from all the companies deploying GB200 at scale: CoreWeave, Azure, Oracle Cloud, Meta, and Google. With a title like "Lessons from Networking 100K+ GPU AI Data Centers and Clouds," I expected it to be a little zany since, despite what NVIDIA would have you believe, there are very few genuine 100K+ GPU clusters in existence today. And I was not disappointed: towards the end of the session, the panelists were asked about scaling to million-GPU clusters with a straight face.

I guess if such a question is asked with enough gravitas, and the panelists answer it with comparable sincerity, nobody will pause to question whether building million-GPU clusters is even a good idea. Like Jensen's "AI Factories will print money" narrative in the keynote, talking about million-GPU clusters in such a matter-of-fact way could easily convince any investor in the room that they've missed the memory, and NVIDIA is undoubtedly preparing to ship million-GPU clusters left and right. However, as someone who works on some of the largest GPU clusters on the planet, I would argue that the only way we'll see million-GPU clusters in the near future is redefining what a cluster means.

That bit of nuttiness aside, a few interesting trends came up in the panel that are worth repeating:

CSPs and hyperscalers have struggled to come to grips with treating collections of servers as a single, tightly coupled entity. Dan Lenoski from Google likened it to the pets-vs-cattle adage often used in computing; while hyperscale came up treating servers like cattle, AI infrastructure has pet-like qualities. For example, rolling upgrades would minimize overall disruption in a traditional cloud workload, but they result in prolonged disruptions to model training since all nodes operate as a cohesive group when training.

Training across datacenters and campuses is becoming a common practice at the largest scales. Hyperscale AI training clusters no longer fit in individual buildings, so if we are to ever claim victory in training across a million GPUs, multiple geographies and electrical substations will undoubtedly be required.

Training clusters will become obsolete very quickly because the latest frontier models only train on the latest GPUs. Given NVIDIA's release cadence of a new GPU every year, today's training clusters will be tomorrow's inferencing clusters, and the storage, networking, and power infrastructure supporting these clusters must suit both workloads.

Stratification

This last point--and the fact that this panel about 100K+ GPU clusters even happened--painted a picture for me that the market for high-end GPUs is rapidly stratifying into distinct layers.

At the top are the hierarchy are hyperscalers and the frontier model trainers they sponsor. This group was largely represented by the panelists qualified to speak of 100K GPU deployments, and they will always be first in line to deploy the latest GPUs with the largest scale-up domains. These are the only people who are actually deploying GB200 NVL72 today, they'll be deploying GB300 NVL72 in the coming months, and they'll be sloughing off their Blackwells as soon as Rubin becomes available.

The next layer down are the non-hyperscale cloud providers and the production inferencing people. This includes the non-hyperscale GPU-as-a-Service providers deploying one-off clusters for smaller-scale model trainers, fine tuners, and companies who provide inferencing services. I talked to at least two GPUaaS providers who said they aren't in a rush to deploy NVL72 simply because the complexity is too high and the demand from their customers, who are not training frontier models, is too low. Instead, they are currently focused on H200 and DGX B200 NVL8, and probably won't adopt NVL72 for another few years (if ever).

And finally, the poor old scientific computing and the HPC community are at the bottom of the pile, still making use of their Ampere-generation GPUs to publish papers. Even the top-end HPC centers are only beginning to deploy GH200 NVL4 now, while the rest of the AI world--even the non-hyperscale providers--are moving on to B200 NVL8.

Given this separation of GPU users, I wouldn't be surprised if we see GPUs age down through these layers over time just like how, say, top-end iPhones gradually become mainstream and lose their premium value. When a new GPU first launches, hyperscalers will buy up all the highest-performance hardware for frontier model training and leave nothing for the average enterprise. As newer GPUs enter the market though, these older GPUs might then wind up in the hands of inferencing workloads at a cost that reflects their reduced training value. And it'd only be after this inferencing value has been extract that these GPUs become accessible to researchers for development and basic research.

Like I wrote above, a lot of signs are pointing to this happening already: only a few companies had Blackwell results to share at GTC this year, but plenty more were eager to talk about their new H200 clusters. And it was only the poor scientists, working hard to extract big speedups from low-precision arithmetic, that were presenting exciting results from A100 GPUs. I don't know if this is a real trend or just the one that I wanted to see based on the talks I attended, but it is undeniable that the only people talking about NVL72 right now are the biggest players in the field, and the only people talking about A100 aren't focusing on AI.

Final thoughts

There was plenty more to see and do that I completely missed, and there's plenty that I didn't have time to document here--like NVIDIA’s wall of reference platform components, perhaps conveniently arranged to reassure regulators that there’s ample competition in the AI industry.

But cynicism aside, it was a rich week in which a lot of shiny hardware and good ideas were put out in the open in highly accessible ways. Although the narrative was carefully curated throughout the week, a lot of the trends that NVIDIA put forth are ultimately where the field of accelerated computing is going to go: AI is far along enough now that inferencing can generate real revenue. The efficiency with which inferences can be served up will determine which AI companies have a future and which ones are headed towards bankruptcy, and bigger NVLink domains--and 600 kW racks--are ways to improve that efficiency.

As with many of these big conferences, GTC was an excellent chance to reconnect with old colleagues and meet some new ones. I don't often have a chance to sit down and get to know many of my coworkers on account of being a remote worker, so I treasure opportunities to be in the same room (or even the same city!) as my peers. GTC attracts everyone who's serious about AI infrastructure, so on that basis alone, it is a week well spent.

All that being said, I'm still not sure that I would be in a rush to attend GTC again. The intellectual rigor was much thinner than conferences like SC or ISC, but at the same time, the level of market realism present was refreshingly high. I got a fair amount out of attending, but I also put a fair amount in (including paying San Jose hotel prices out of my own pocket!). For everything GTC did worse than SC or ISC, they also did something better. So in the end, it's hard to put a fine point on it, but despite some shortcomings, it's a conference worth experiencing at least once--particularly for the practical grounding in where AI infrastructure is headed.

Even if I don't attend GTC next year, I look forward to seeing where the industry will have moved by then. I'm heartened by the overlaps that emerged between the AI and HPC communities, particularly around high-power rack-scale infrastructure and the techniques required to exploit low-precision arithmetic. Both industries will benefit from cracking these nuts, and if GTC is at all representative, it looks like both researchers and industry are finding ways to drive in the same direction.

%20and%20DGX%20GB200%20(right)%20from%20GTC25.jpeg)