ISC'25 recap

I had the pleasure of attending the 40th annual ISC High Performance conference this month in Hamburg, Germany. It was a delightful way to take the pulse of the high-performance computing community and hear what the top minds in the field are thinking about.

The conference felt a little quieter than usual this year, and there didn't seem to be as many big ideas and bold claims as in years past. There was a new Top 10 system announced, but it was built using previous-generation Hopper GPUs. There were a record number of exhibitors, but many of the big ones (Intel, AMD; the big three cloud providers) were all absent. And while there were some exciting new technologies (like AMD MI350-series GPUs and Ultra Ethernet v1.0) debuting during the week, they actually debuted elsewhere and were simply referenced throughout the week's talks.

This year's ISC really felt like the place where the big news of the industry was being repeated in the context of scientific computing instead of being stated for the first time. And maybe this is the future of HPC conferences: rather than being where new technology is announced, perhaps ISC will become where the scientific community tries to figure out how they can use others' technology to solve problems. That idea--figuring out how to make use of whatever the AI industry is releasing--was certainly pervasive throughout the ISC program this year. The conference's theme of "connecting the dots" felt very appropriate as a result; rather than defining new dots, the conference was all about trying to make sense of the dots that have already been drawn.

I took plenty of notes to try to keep track of everything that was being discussed, and as has become tradition, I've tried to summarize some of the key themes in this post.

Table of contents

- Zettascale

- Ozaki, Ozaki, Ozaki

- Top500

- HPC around the world

- Exhibitors

- Cloud, or lack thereof

- Parting thoughts

Zettascale

Now that exascale is squarely in the rear-view mirror of HPC, an increasing number of high-profile speakers began pushing on zettascale as the next major milestone. Like the early days of exascale, most of the discourse was less about what can be achieved with zettascale and more about the technology challenges that need to be surmounted for HPC to continue moving forward. And to that end, using zettascale to justify tackling big hardware and software challenges wasn't a bad thing, but it felt like every talk about zettascale this year was still more fanciful than anything else.

The opening keynote, "HPC and AI - A Path Towards Sustainable Innovation," was delivered by a duo of CTOs: Mark Papermaster (of AMD) and Scott Atchley (of Oak Ridge Leadership Computing Facility). It was a textbook keynote: it had inspiring plots going up and to the right that showed huge potential! It had scary linear extrapolations showing that staying the course won't do! It had amazing science results enabled by big iron! It even had a surprise product debut in MI355X! ChatGPT couldn't have come up with a better structure for a keynote presentation. But as is my wont, I listened to the talk with a little skepticism and found myself raising an eyebrow a few times.

A part of Papermaster's presentation involved an extrapolation to zettascale by 2035 and claimed that HPC is approaching an "energy wall:"

He specifically said that we'd need 1 GW per supercomputer to reach zettascale by 2035 on the current trajectory. He then used this to motivate "holistic co-design" as the only way to reach zettascale, and he went on to talk about all the same things we heard about leading up to exascale: increase locality and integration to reduce power and increase performance.

While I agree that we should aspire to do better than a gigawatt datacenter, this notion that there is an "energy wall" that stands between us and zettascale is a bit farcical; there's nothing special about a 1 GW zettascale supercomputer, just like there was nothing special about 20 MW for exascale. You might argue that building a supercomputer that consumes all the power of a nuclear reactor might be fundamentally more difficult than one that consumes only 20 MW, and you'd be right--which is why the first gigawatt supercomputers probably aren't going to look like the supercomputers of today.
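To put the two power budgets side by side, here is a quick back-of-the-envelope sketch of the energy efficiency each one implies. The 20 MW and 1 GW figures come from the text above; treating them as exact, sustained power draws at exactly 1 EF and 1 ZF is a simplifying assumption, and the function name is only illustrative.

```python
# Implied energy efficiency of "1 EF at 20 MW" versus "1 ZF at 1 GW".
# Assumption: both power figures are treated as exact, sustained draws.
EXAFLOPS = 1e18
ZETTAFLOPS = 1e21


def gflops_per_watt(flops, watts):
    """Return sustained FLOPS per watt, expressed in GFLOPS/W."""
    return flops / watts / 1e9


exascale_at_20mw = gflops_per_watt(EXAFLOPS, 20e6)
zettascale_at_1gw = gflops_per_watt(ZETTAFLOPS, 1e9)

print(f"1 EF at 20 MW implies {exascale_at_20mw:,.0f} GFLOPS/W")
print(f"1 ZF at 1 GW implies {zettascale_at_1gw:,.0f} GFLOPS/W")
print(f"Efficiency ratio: {zettascale_at_1gw / exascale_at_20mw:.0f}x")
print(f"Power ratio: {1e9 / 20e6:.0f}x")
```

Under these assumptions, the 1000x jump in performance splits into a 50x jump in power and a 20x jump in efficiency, which is the sense in which the gigawatt figure is an extrapolation rather than a physical limit.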

Papermaster's "energy wall" slide reminded me of the great horse manure crisis of 1894, where people extrapolated from today using an evolutionary, not revolutionary, trajectory. If building a single gigawatt supercomputer is inconceivable, then build four 250 MW supercomputers and put a really fast network between them to support a single, synchronous job. The AI industry is already headed down this road; Google, Microsoft, and OpenAI have already talked about how they synchronously train across multiple supercomputers, and Microsoft announced their 400 Tb/s "AI WAN" for this last month as a means to enabling wide-area training.

Granted, it's unlikely that the HPC community will be building massive, distributed supercomputers the way hyperscale is. But I was disappointed that the keynote only went as far as saying "a gigawatt supercomputer is crazy, so we need codesign at the node/rack scale." Codesign to reach zettascale will probably require a whole new approach that, for example, accounts for algorithms that synchronize communication across multiple datacenters and power plants. The infrastructure for that is already forming, with the US developing its Integrated Research Infrastructure (IRI) and Europe shaping up to have over a dozen AI factories. Zettascale by 2035 may very well exist for the scientific computing community, but it'll probably look a lot more like hyperscale zettascale rather than a single massive building. A single machine plugged into a gigawatt nuclear reactor only happens if business-as-usual is extrapolated out another ten years as Papermaster did, and the codesign required to achieve that isn't very meaningful.

Prof. Satoshi Matsuoka also gave a talk on the big stage about Fugaku-NEXT, which Japan has branded as a zettascale system. His vision, which will be realized before 2030, aims to deploy a single, 40 MW supercomputer (much like Fugaku was) where:

- 10x-20x speedup comes from hardware improvements

- 2x-8x speedup comes from mixed precision or emulation (more on this below)

- 10x-25x speedup comes from surrogate models or physics-informed neural networks

The net result is a 200x-4000x speedup over Fugaku. His rationale is that this will result in a system that is effectively equivalent to somewhere between 88 EF and 1.7 ZF FP64. It's not literally doing that many calculations per second, but the science outcomes are equivalent to a brute-force approach using a much larger system.
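The arithmetic behind those ranges can be sketched as follows. The three speedup ranges are the ones listed above; the Fugaku baseline of 442 PF (its Top500 HPL score) is an assumption that the talk summary does not state explicitly, and the variable names are illustrative.

```python
import math

# Assumption: baseline is Fugaku's HPL result of roughly 442 PF.
FUGAKU_HPL_PF = 442.0

# (low, high) speedup ranges from the three sources listed above.
speedups = {
    "hardware improvements": (10, 20),
    "mixed precision or emulation": (2, 8),
    "surrogate models / PINNs": (10, 25),
}

low_total = math.prod(low for low, _ in speedups.values())
high_total = math.prod(high for _, high in speedups.values())

low_equiv_ef = FUGAKU_HPL_PF * low_total / 1e3    # PF -> EF
high_equiv_zf = FUGAKU_HPL_PF * high_total / 1e6  # PF -> ZF

print(f"Combined speedup: {low_total}x to {high_total}x")
print(f"FP64-equivalent: {low_equiv_ef:.1f} EF to {high_equiv_zf:.2f} ZF")
```

Because the factors multiply, the low and high ends of the range differ by 20x, and only the first factor reflects operations the hardware actually performs.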

I thought this approach to reaching zettascale was much more realistic than the Papermaster approach, but it does require the scientific computing community to redefine its metrics of success. If HPL was a bad benchmark for exascale, it is irrelevant to zettascale since it's unlikely that anyone will ever run HPL on a zettascale system. At best, we'll probably see something like HPL-MxP that captures the 10x-20x hardware speedup and the 2x-8x from mixed precision or emulated FP64 to reach hundreds of exaflops, but the 10x-25x from surrogate models will be domain-specific and defy simplistic ranking. If I had to guess, the first zettascale systems will be benchmarked through Gordon Bell prize papers that say things like "simulating this result using conventional FP64 would have required over 1 ZF for 24 hours."

Ozaki, Ozaki, Ozaki

Although Prof. Matsuoka invoked the 2x-8x speedup from mixed precision or emulation when claiming Fugaku-NEXT would be zettascale, he was far from the only speaker to talk about mixed precision and emulation. In fact, it seemed like everyone wanted to talk about emulating FP64, specifically using NVIDIA's low-precision tensor cores and the Ozaki scheme (or its derivatives). By the end of the week, I was absolutely sick of hearing about Ozaki.

For the unindoctrinated, this Ozaki scheme (and similar methods with less-catchy names) is a way to emulate matrix-matrix multiplications at high precision using low-precision matrix operations. It's become so hot because, despite requiring more arithmetic operations than a DGEMM implemented using WMMA/MFMA instructions, it can crank out a ton of FP64-equivalent operations per unit time. This is a result of the ridiculously nonlinear increases in throughput of low-precision tensor/matrix cores on modern GPUs; for example, Blackwell GPUs can perform over 100x more 8-bit ops than 64-bit ops despite being only 8x smaller. As a result, you can burn a ton of 8-bit ops to emulate a single 64-bit matrix operation and still realize a significant net speedup over hardware-native FP64. Matsuoka presented the following slide to illustrate that:

Emulation offers a way for scientific apps that need high-precision arithmetic to directly use AI-optimized accelerators that lack FP64 in hardware, so it's worth talking about at conferences like ISC. But it seems like everyone wanted to name-drop Ozaki, and the actual discussion around emulation was generally a rehash of content presented earlier in the year at conferences like GTC25.
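To make the idea of emulating a high-precision matrix multiply with low-precision operations concrete, here is a minimal NumPy sketch in the spirit of the integer-slice variants of the Ozaki scheme. It is not NVIDIA's implementation and not the exact algorithm presented at ISC: the function names, the 7-bit slice width, and the choice of eight slices are all assumptions made for illustration. Each matrix is split into int8 "digits" that share a power-of-two scale per row (or column), every pair of digit matrices is multiplied exactly in integer arithmetic, and the partial products are recombined in FP64.

```python
import numpy as np

SLICE_BITS = 7  # each slice is a signed 7-bit digit, so it fits in int8


def split_into_int8_slices(M, num_slices, axis):
    """Split a float64 matrix into int8 slices sharing one power-of-two
    scale per row (axis=1) or per column (axis=0)."""
    max_abs = np.max(np.abs(M), axis=axis, keepdims=True)
    _, exponent = np.frexp(max_abs)    # max_abs = m * 2**exponent, 0.5 <= m < 1
    scale = np.ldexp(1.0, exponent)    # 2**exponent, so |M / scale| < 1
    residual = M / scale               # power-of-two scaling, no rounding
    slices = []
    for _ in range(num_slices):
        residual = residual * 2.0**SLICE_BITS  # expose the next 7 bits
        digits = np.trunc(residual)            # integers in [-127, 127]
        slices.append(digits.astype(np.int8))
        residual = residual - digits           # exact remainder, |r| < 1
    return slices, scale


def emulated_dgemm(A, B, num_slices=8):
    """Approximate A @ B in FP64 using only int8 x int8 -> int32 products."""
    a_slices, a_scale = split_into_int8_slices(A, num_slices, axis=1)
    b_slices, b_scale = split_into_int8_slices(B, num_slices, axis=0)
    C = np.zeros((A.shape[0], B.shape[1]))
    # Accumulate from least to most significant slice pair, skipping pairs
    # whose weight falls below the precision retained by the slices.
    for k in reversed(range(num_slices)):
        for l in reversed(range(num_slices - k)):
            # Exact integer GEMM; int32 accumulation is safe while the
            # inner dimension stays well below 2**31 / 127**2.
            partial = a_slices[k].astype(np.int32) @ b_slices[l].astype(np.int32)
            C += np.ldexp(partial.astype(np.float64), -SLICE_BITS * (k + l + 2))
    return C * a_scale * b_scale


rng = np.random.default_rng(0)
A = rng.uniform(-1.0, 1.0, size=(32, 48))
B = rng.uniform(-1.0, 1.0, size=(48, 16))
print("max abs error vs. FP64:", np.max(np.abs(emulated_dgemm(A, B) - A @ B)))
```

With eight slices, this sketch issues 36 small-integer matrix products for every emulated FP64 product, which is why the approach only pays off on hardware where 8-bit throughput dwarfs native FP64 throughput.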

While hearing about FP64 emulation and Ozaki schemes got tiring throughout the week, I had to remind myself that I hadn't even heard about Ozaki before September 2024 at the Smoky Mountains Conference. The fact that the Ozaki scheme went from relative algorithmic obscurity to being the star of the show in nine months is either a reflection of its incredible importance in scientific computing or a testament to the reach of NVIDIA's marketing.

Cynically, I'll bet that NVIDIA is probably doing everything it can to make sure the world knows about the Ozaki scheme, and ISC was a part of that. When the datasheets for Rubin GPUs are released, I'll bet the performance table has a row claiming a bazillion FP64 FLOPS, and there will be a tiny footnote that clarifies they're citing emulated FP64 precision. They did it with structured sparsity, and I'm sure they'll do it for emulated DGEMM.

Although the Ozaki scheme is perhaps over-hyped considering how narrow its applicability is to the broad range of compute primitives used in scientific computing, I do anticipate that it is the tip of the iceberg. If 2025 was the year of the Ozaki scheme, 2026 may be the year of the emulated FP64 version of FFTs, sparse solvers, stencils, or other key algorithms. We're seeing signs of that already; David Keyes and Hatem Ltaief both presented material at ISC on using mixed-precision matrix operations for other scientific problems, and I mentioned their work in my earlier GTC25 blog. I'm not sure "the Keyes scheme" or "the Ltaief scheme" is as catchy as "the Ozaki scheme," but I expect to hear more about these other emulation techniques before ISC26.

Top500

On the topic of matrix-matrix multiplication, I can't get too much farther without talking about the Top500 list released at ISC. Although there was no new #1 system, Europe's first exascale system, JUPITER, made its sub-exascale debut. There were also a number of new entries in the Top 50, and surprisingly, many of them came from companies that offer GPUs-as-a-Service for AI training rather than the usual public-sector sites delivering cycles for scientific research. However, all the new entries were still using previous-generation Hopper GPUs despite huge Blackwell systems coming online, exposing a perceptible lag between the state of the art in supercomputers for AI and traditional HPC.

As with last year, I felt a growing tension between what the Top500 list brings to the discussion and where the large-scale supercomputing industry is headed. As I wrote earlier, mixed precision and emulated FP64 were hot topics in the technical program, but the emphasis of the Top500 session was still squarely on bulk-synchronous FP64 performance. HPL-MxP awards were handed out, but they all wound up in the hands of systems that were also at the top of the regular HPL list. Nobody is submitting HPL-MxP-only scores, and there was no meaningful discussion about the role that the Ozaki scheme will play going forward in Top500's future.

Opining about the long-term future of the Top500 list is a whole separate blog post though, so I'll focus more on what was covered at this year's session.

JUPITER

JUPITER was the only new entrant into the Top 10, and it posted at #4 with an average 793 PF over a hundred-minute run. Though it hasn't broken the 1 EF barrier yet, JUPITER is noteworthy for a few reasons:

- It is expected to be Europe's first exascale system. Given this HPL run used only 79% of the Booster Module's 5,884 GH200 nodes, some basic extrapolation puts the full-system run just a hair above 1 EF. Jülich will either have to run with 100% node availability or get a few extra nodes to exceed 1 EF though.

- JUPITER is also now the biggest NVIDIA-based supercomputer on Top500, pushing Microsoft's H100 SXM5 system (Eagle) down to #5. JUPITER is also Eviden's biggest system and a strong affirmation that Europe isn't dependent on HPE/Cray to deliver on-prem systems of this scale.
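The "basic extrapolation" in the first bullet can be sketched as below. The 793 PF score, the 79% figure, and the 5,884-node count are taken from the text; the assumption that HPL performance scales linearly with node count is a simplification (real runs lose some efficiency at scale), and the variable names are illustrative.

```python
import math

# Figures quoted above for the JUPITER Booster HPL run.
HPL_PF = 793.0
FRACTION_OF_NODES_USED = 0.79
TOTAL_NODES = 5884

# Assumption: HPL performance scales linearly with node count.
nodes_used = FRACTION_OF_NODES_USED * TOTAL_NODES
pf_per_node = HPL_PF / nodes_used
full_system_pf = pf_per_node * TOTAL_NODES
nodes_for_one_exaflop = math.ceil(1000.0 / pf_per_node)
spare_nodes = TOTAL_NODES - nodes_for_one_exaflop

print(f"Full-system estimate: {full_system_pf:.0f} PF")
print(f"Nodes needed for 1 EF: {nodes_for_one_exaflop} of {TOTAL_NODES}")
print(f"Nodes that could be down during the run: {spare_nodes}")
```

Under linear scaling, the full Booster lands only a fraction of a percent above 1 EF, leaving room for roughly two dozen nodes to be unavailable, which is why any scaling loss would push the requirement past the installed node count.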

JUPITER was also installed into a modular datacenter, an approach that is emerging as a preferred method for rapidly deploying large GPU systems in Europe. This setup allowed Jülich to place shipping container-like modules on a concrete foundation in just a few months. However, because the datacenter is form-fit to the JUPITER system without much extra space, it's impossible to take a glamor shot of the entire machine from far away. As a result, most photos of JUPITER show only the datacenter modules that wrap the supercomputer racks. For example, Prof. Thomas Lippert shared this photo of JUPITER during his presentation:

As Lippert was describing JUPITER, I couldn't help but compare it to the AI supercomputers I support at my day job. Like JUPITER, our supercomputers (like Eagle) aren't very photogenic because they're crammed into form-fitted buildings, and they are best photographed from the sky rather than the ground. For example, here's a photo of one of Microsoft's big GB200 supercomputers that I presented later in the week:

JUPITER may be the first exascale system listed on Top500 that doesn't have fancy rack graphics, but I don't think it will be the last.

I also found myself wondering if these modular datacenters are trading short-term upsides for long-term downsides. While they accelerate deployment time for one-off supercomputers, it wasn't clear to me if these modular structures are reusable. Does the entire datacenter retire along with JUPITER after 5-7 years?

Hyperscalers use modular datacenters too, but the modularity is more coarse-grained to support a wider variety of systems over multiple decades. They're also physically more capacious, allowing them to deploy more CDUs and transformers per rack or row to retrofit them for whatever power and cooling demands arise over the full depreciation life of the datacenter building.

HPC-AI system intersection

As with last year, Erich Strohmeier did a walkthrough of Top500 highlights, and he argued that "hyperscale" is defined as anything bigger than 50 MW, and therefore the Top500 list is hyperscale. It wasn't clear what value there was in trying to tie the Top500 list to hyperscale in this way, but there were a few ways in which Top500 is beginning to intersect with hyperscale AI.

Foremost is the way in which some exascale systems have been appearing on the list: they first appear after HPL is run on a big but partially deployed machine, then six months later, the full-system run is listed. Aurora and JUPITER both follow this pattern. What's not obvious is that many massive AI supercomputers also do something like this; for example, the Eagle system's 561 PF run was analogous to Aurora's initial 585 PF run or JUPITER's 793 PF run. The difference is that systems like Eagle typically enter production training after that first big tranche of GPUs is online, so there is never an opportunity to run HPL as more of the system powers up. Instead, the production training job simply expands to consume all the new GPUs as new tranches come online.

This iteration of the Top500 list also saw a number of bona fide commercial AI training clusters from smaller GPU-as-a-Service and "AI factory" providers post results, giving the public a view of what these systems actually look like:

- Nebius listed ISEG2 at #13 with a 624-node, 202 PF H200 SXM cluster, following their 2023 Top500 debut with a 190-node, 46 PF H100 SXM cluster. Nebius was spun out of Yandex, the Russian tech conglomerate.

- Northern Data Group debuted Njoerd at #26 with a 244-node H100 SXM cluster. Northern Data Group started out as a German bitcoin mining company.

- FPT debuted at #36 with a 127-node H200 SXM cluster and #38 with a 127-node H100 SXM cluster. FPT is a Vietnamese technology conglomerate.

It's notable that none of these systems resemble the sovereign AI systems or EuroHPC AI Factories cropping up in Europe, which are attached to traditional HPC centers and built on familiar HPC platforms like Cray EX or BullSequana. Rather, they're essentially NVIDIA reference architectures that resemble DGX SuperPods but are stamped out by companies like Supermicro, Gigabyte, and ASUS.

While it's nice of these GPU-as-a-Service companies to participate in the Top500 list, I did not see anyone from these companies in the technical program in any other way. And I did not see anyone from the bigger GPU-as-a-Service providers (CoreWeave, Crusoe, Lambda, etc) contributing either. Thus, while these companies are participating in Top500, it doesn't seem like they're genuinely interested in being a part of the HPC community.

Other new entrants

If you take a step back and look at the ten largest systems that made their debut at ISC'25, they broadly divide into two categories. Here's the list:

| Rank | System | Platform | Site |
|---|---|---|---|
| 4 | JUPITER Booster | GH200 | Jülich |
| 11 | Isambard-AI phase 2 | GH200 | Bristol |
| 13 | ISEG2 | H200 SXM5 | Nebius |
| 15 | ABCI 3.0 | H200 SXM5 | AIST |
| 17 | Discovery 6 | GH200 | ExxonMobil |
| 18 | SSC-24 | H100 SXM5 | Samsung |
| 26 | Njoerd | H100 SXM5 | Northern Data Group |
| 27 | ABCI-Q | H100 SXM5 | AIST |
| 33 | AI-03 | MI210 | Core42 |
| 36 | FPT AI Factory Japan | H200 SXM5 | FPT |

Aside from Core42's weird MI210 cluster, every new big system was either GH200 (for traditional HPC) or H100/H200 SXM5 (for AI). This suggests a few interesting things:

- None of the AI cloud/GPUaaS providers are talking about GH200. It seems that GH200 is squarely for scientific computing, and Hopper HGX systems are preferred for AI at scale.

- Despite debuting on Top500 two years ago, H100 is still making its way into the hands of HPC and AI sites. This could mean one of several things:

  - H100 is more affordable now (Jensen says he can't give them away),

  - there was a huge backlog of H100 orders, or

  - it's just taking some places a really long time to get H100 up and running

- Blackwell is not relevant to HPC right now. There are no big Blackwell systems on this list, nor was Blackwell discussed in any sessions I attended during the week. This is despite large GB200 systems being public, up, and benchmarked. For example, CoreWeave, IBM, and NVIDIA ran MLPerf Training across 39 racks (624 nodes) of a GB200 NVL72 system named Carina just last month. They did not appear to bother with HPL, though.

From all this, it seems like there is a definite lag forming between what qualifies as "leadership computing" to HPC people and AI people. Today's leadership HPC (Hopper GPUs) is yesterday's leadership AI, and today's leadership AI (Blackwell GPUs) isn't on the radar of leadership HPC yet. Maybe GB200 will begin appearing one or two years later as the AI people move on to Vera-Rubin.

So, if I had to guess, I think the top-end of Top500 in 2027 could look like one of three things:

- It will contain HPC systems with state-of-the-art, HPC-specific variants of accelerators that are completely irrelevant to AI. Large AI training systems will simply disappear from the list, because HPL has ceased to be a meaningful measure of their capability. GB200/GB300 simply never appear on Top500.

- It will contain HPC systems with previous-generation Blackwell accelerators after Jensen (the chief revenue destroyer) gets on stage and tells the world that Blackwell is junk because Rubin is awesome. The AI industry gobbles up all the Rubin GPUs, and HPC picks up the scraps they leave behind.

- Top500 starts allowing FP64 emulation, and all bets are off on how ridiculous the top systems' numbers look. In this case, top systems just skip the 1-10 exaflops range and start debuting at tens of exaflops.

I have no idea where things will go, but we're starting to see big HPC deals targeting Vera Rubin that line up with the same time Rubin will land for the AI industry in 2H2026. So maybe Blackwell is just a hiccup, and option #1 is the most likely outcome.

HPC around the world

Though Blackwell's absence from Top500 was easy to overlook, China's continued absence was much more obvious. Even though no new Chinese systems have been listed in a few years now, representatives from several Chinese supercomputing centers still contributed invited talks throughout the week.

In that context, I appreciated how fully ISC embraces its international scope. I found myself attending a lot of "HPC Around the World" track sessions this year, partly because I work for a multinational corporation and have to stay aware of potential needs outside of the usual US landscape. But there's also been a sharp rise in the amount of serious HPC that is now occurring outside of the USA under the banner of "sovereign AI," and I've been keen to understand how "sovereign AI" compares to the US-based AI infrastructure in which I work.

Before getting too deep into that though, China is worth discussing on its own since they had such a prominent presence in the ISC program this year.

HPC in China

Following the single-track opening keynote on the first day of ISC is the single-track Jack Dongarra Early Career Award Lecture, and this year's talk was given by awardee Prof. Lin Gan from Tsinghua University. In addition, Dr. Yutong Lu gave two separate talks--including the closing keynote--which shed light on the similarities and differences between how China and the US/Europe are tackling the challenges of exascale and beyond.

China is in a position where it does not have access to US-made GPUs, forcing them to develop their own home-grown processors and accelerators to meet their needs for leadership computing. As a result, both speakers gave talks that (refreshingly) revolved around non-GPU technologies as the basis for exascale supercomputers. Although neither Gan nor Lu revealed anything that wasn't already written about in the Gordon Bell prize papers, I took away a few noteworthy observations:

The most public Chinese exascale system is always called the "New Sunway" or "Next Generation Sunway," never "OceanLight" as has been reported in western media. There still aren't any photos of the machine either, and Dr. Gan used stock diagrams of the predecessor Sunway TaihuLight to represent New Sunway. There was no mention of the Tianhe Xingyi/TH-3 supercomputer at all.

Chinese leadership computing details remain deliberately obfuscated despite the openness to present at ISC. For example, Lu presented the following English-language table from the 2024 China Top100 HPC list:

| No. | Vendor | System | Site | Year | Application | CPU Cores | Linpack (Tflops) | Peak (Tflops) | Efficiency (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Server Provider | Supercomputing system mainframe system, heterogeneous many-core processor | Supercomputing Center | 2023 | computing service | 15,974,400 | 487,540 | 620,000 | 78.7 |
| 2 | Server Provider | Internet Company Mainframe System, CPU+GPU heterogeneous many-core processor | Internet company | 2022 | computing service | 460,000 | 208,260 | 390,000 | 53.4 |
| 3 | Server Provider | Internet Company Mainframe System, CPU+GPU heterogeneous many-core processor | Internet company | 2021 | computing service | 285,000 | 125,040 | 240,000 | 52.1 |
| 4 | NRCPC | Sunway TaihuLight, 40960*Sunway SW26010 260C 1.45GHz, customized interconnection | NSCC-WX | 2016 | supercomputing center | 10,649,600 | 93,015 | 125,436 | 74.2 |
| 5 | Server Provider | Internet Company Mainframe System, CPU+GPU heterogeneous many-core processor | Internet company | 2021 | computing service | 190,000 | 87,040 | 160,000 | 51.2 |
| 6 | NUDT | Tianhe-2A, TH-IVB-MTX Cluster + 35584*Intel Xeon E5-2692v2 12C 2.2GHz + 35584 Matrix-2000, TH Express-2 | NSCC-GZ | 2017 | supercomputing center | 427,008 | 61,445 | 100,679 | 61.0 |
| 7 | Server Provider | Internet Company Mainframe System, CPU+GPU heterogeneous many-core processor | Internet company | 2021 | computing service | 120,000 | 55,880 | 110,000 | 50.8 |
| 8 | Server Provider | ShenweiJing Supercomputer System, 1024*SW26010Pro heterogeneous many-core processor 390C MPE 2.1 GHz | Computing Company | 2022 | scientific computing | 399,360 | 12,912 | 14,362 | 89.9 |
| 9 | Server Provider | Supercomputing Center System, 992*SW26010Pro heterogeneous many-core processor 390C MPE 2.1 GHz | Supercomputing Center | 2021 | scientific computing | 386,880 | 12,569 | 13,913 | 90.3 |
| 10 | BSCCC/Intel | BSCCC T6 Section 5360*Intel Xeon Platinum 9242 homogeneous many-core processor 48C 2.3 GHz, EDR | BSCCC | 2021 | computing service | 257,280 | 10,837 | 18,935 | 57.2 |

The #1 system is almost definitely built on SW26010P processors just like the big New Sunway system that Gan discussed (15,974,400 cores / 390 cores per SW26010P = 40,960 nodes), but it's significantly smaller than the 39M cores on which the work Gan highlighted was run. Clearly, China's biggest systems aren't on their own Top100 list, and their #1 listed system only says its processors are "heterogeneous many-core" despite smaller entries explicitly listing SW26010P (Pro) processors.
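The core-count inference above can be checked against the table itself. The sketch below uses only numbers from the table rows; the 390-cores-per-processor figure is the "390C" listed for SW26010Pro in rows 8 and 9, and the helper name is illustrative.

```python
# Core counts and processor counts as listed in the China Top100 table.
CORES_PER_SW26010PRO = 390   # "390C" in rows 8 and 9
CORES_PER_SW26010 = 260      # "260C" in row 4 (Sunway TaihuLight)


def processors_from_cores(total_cores, cores_per_processor):
    """Return (processor_count, leftover_cores) for a listed core count."""
    return divmod(total_cores, cores_per_processor)


# Rows 8 and 9 state their processor counts explicitly, so they confirm
# that the listed core counts are simply processors * 390.
row8 = processors_from_cores(399_360, CORES_PER_SW26010PRO)
row9 = processors_from_cores(386_880, CORES_PER_SW26010PRO)

# Row 1 does not name its processor; test the SW26010Pro hypothesis.
row1 = processors_from_cores(15_974_400, CORES_PER_SW26010PRO)

# Row 4 (TaihuLight) for comparison.
row4 = processors_from_cores(10_649_600, CORES_PER_SW26010)

for label, (count, leftover) in [("row 1", row1), ("row 4", row4),
                                 ("row 8", row8), ("row 9", row9)]:
    print(f"{label}: {count} processors, {leftover} cores left over")
```

The #1 entry divides evenly into 40,960 processors, the same count that the table lists for Sunway TaihuLight, which is consistent with (though not proof of) an SW26010Pro-based system.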

Chinese leadership computing struggles aren't being hidden. Lu specifically called out a "lack of a new system" in 2024, echoing earlier sentiments from other leaders in Chinese HPC who have referred to "some difficulties in recent years" and a "cold winter" of HPC. She also said that their leadership systems are "relatively" stable rather than trying to overstate the greatness of Chinese HPC technology. But as with above, she didn't get into specifics; by comparison, Scott Atchley (of Oak Ridge Leadership Computing Facility) specifically quoted a 10-12 hour mean time between job interrupt on Frontier after his keynote. Whether 10-12 hours is "relatively stable" remained unspoken.

Performance portability wasn't a top-line concern despite how hard it seems to port applications to Chinese accelerators. SW26010P is weird in that it has a host core and offload cores with scratchpads, and its native programming model (Athread) is very CUDA-like as a result. Gan made it seem that China is investing a lot of effort into "fine-grained optimizations" using OpenACC and Athread, and he showed all the ways in which they're rewriting a lot of the kernels and decompositions in complex applications (like CAM) to make this work. This sounds like a performance portability nightmare, yet there wasn't much talk about Chinese equivalents to performance portability frameworks like Kokkos, RAJA, or alpaka.

Lu did name-drop a few frameworks that unify HPC and AI performance portability from around the world:

However, these were more about aligning efforts across scientific computing and AI rather than enabling scientific apps to run seamlessly across China's different exascale accelerators.

Application focus areas in China seem similar to everywhere else. Classical and quantum materials modeling, climate and ocean modeling, electronic structure calculations, and genomics were all mentioned by Gan and Lu in their talks. There was no mention of stockpile stewardship or any defense-related applications of HPC, though I'm sure China is using big supercomputers in these efforts just as US and European nations do. The only unusual application that I noticed was Gan's mention of implementing reverse time migration (RTM) on FPGAs; I've only ever heard of RTM in the context of oil exploration. Though I'm no expert, I didn't think many HPC centers spent a lot of time focusing on that technique. I do know KAUST has done some work optimizing RTM applications with Aramco in the space, but most other national supercomputing centers keep oil and gas at arm's length. Gan's RTM work may be related to earthquake modeling rather than petroleum, but it stood out nonetheless.

Nobody talked about GPUs. Gan spent a healthy amount of time talking about applying FPGAs and NPUs to scientific problems, but these are areas of research that are on the fringes of mainstream HPC. I'm not sure if this reflected his own interests or priority research directions in China, but given that Chinese researchers cannot procure NVIDIA or AMD GPUs, perhaps FPGAs and NPUs are being pursued as a potential next-best-thing. Necessity truly is the mother of invention, and China might be the driver of a disproportionate amount of innovation around dataflow processing and reduced precision for modeling and simulation workloads.

Nobody talked about storage either. I'm not sure if this suggests China has a lopsided interest in compute over holistic system design, or if they just talked about their biggest challenges (which are using home-grown accelerators productively). Granted, keynote speakers rarely talk about storage, but I didn't see much participation from China in any of the subsystem-specific sessions I attended either. This is particularly notable since, for a time, Chinese research labs were dominating the IO500 list with their home-made file systems. Networking was mentioned in passing in Lu's closing keynote, but not much beyond another example of technology fragmentation, and there were no specific Chinese interconnects being discussed during the week.

China is in the thick of AI just like the rest of the world. Lu said that 30% of the cycles on their big HPC systems go to AI, which is right in line with anecdotes from other HPC sites that put their figures at somewhere up to 50%. She also presented the Chinese taxonomy of the three ways in which AI and scientific computing can mesh together: HPC for AI (training LLMs on supercomputers), HPC by AI (AI for system design and operations), and HPC and AI (AI in the loop with simulation). China is also neck-deep in figuring out how to exploit reduced precision (or "intelligent computing," as Lu branded it) and has pivoted from being "performance driven" (which I took to mean HPL-driven) to "target driven" (which I took to mean scientific outcome-driven). This is consistent with their recent Gordon Bell prize win and non-participation in either Top500 or China Top100.

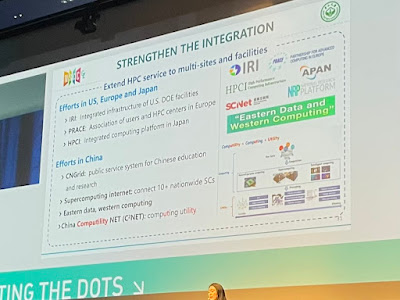

China is embracing geo-distributed supercomputing and complex workflows, much like the US. Lu specifically called out "Computility Net," a catchy name that sounded a lot like the US DOE's Integrated Research Infrastructure (IRI). She described it as a national effort to combine supercomputing with "commodity IT" resources (perhaps Chinese cloud?) to enable "resource sharing" through a "service grid." In her closing keynote, she even name-dropped IRI:

She did liken Computility to both IRI in the US and PRACE in the EU though, and in my mind, PRACE is nothing like IRI. Rather, PRACE is more like TeraGrid/XSEDE/ACCESS in that it federates access to HPC systems across different institutions, whereas IRI's ambition is to tightly integrate computational and experimental facilities around the country. But from the above slide, it sounds like Computility Net is closer to IRI since it is coupled to "Supercomputing internet" (akin to ESnet?) and bridging compute and data across eastern and western China.

Elsewhere in Asia

Although Chinese researchers headlined a few sessions at ISC, a number of other Asian nations presented their national supercomputing strategies as well. Japan and Korea have mature, world-class HPC programs, but I was surprised to see how ambitious India has become to catch up. Smaller nations were also represented, but it was clear to me that their focus is spread across midrange HPC, partnering with large centers in Korea/Japan, and innovating around the edges of supercomputing. And perhaps unsurprisingly, every nation represented had a story around both quantum computing and artificial intelligence regardless of how modest their production modsim infrastructure was.

India appears to be rapidly catching up to the US, Europe, and Japan much in the same way China was fifteen years ago. Representatives from C-DAC, the R&D organization that owns the national supercomputing mission in India, gave a far-reaching presentation about India's ambition to achieve exascale by 2030. Their current strategy appears to be broad and capacity-oriented, with forty petascale clusters spread across India for academic, industrial, and domain-specific research. They have a comprehensive, if generic, strategy that involves international collaboration in some regards, reliance on open-source software to fill out their HPC environment story, and home-grown hardware and infrastructure:

I was surprised to hear about their ambitions to deploy their own CPUs and interconnect though. India is pursuing both ARM and RISC-V for their own CPUs for a future 200 PF system, and they're already deploying their "InfiniBand-like" interconnect, TRINETRA, which uses funny NICs with 6x100G ports or 10x200G ports rather than fewer, faster serdes. I didn't hear mention of their AI acceleration plans, but rolling their own commercialized CPU and interconnect in itself is a lot to bite off. Given that India is the world's fastest growing economy though, these plans to go from 20 PF in 2025 to 1 EF in 2030 may not be that far-fetched. Perhaps the Indian national strategy will become clearer during the inaugural Supercomputing India 2025 conference this December.
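To put the 20 PF to 1 EF jump in perspective, here is a rough back-of-the-envelope sketch of the sustained growth it implies. It assumes smooth, constant year-over-year growth (real procurements arrive in steps, so this is only an illustration), and the function and variable names are my own.

```python
import math

def annual_growth_factor(start_pf: float, end_pf: float, years: float) -> float:
    """Constant yearly multiplier needed to grow from start_pf to end_pf."""
    return (end_pf / start_pf) ** (1.0 / years)

start_pf = 20.0        # 20 PF in 2025, as cited in the talk
end_pf = 1000.0        # 1 EF = 1,000 PF in 2030, as cited in the talk
years = 2030 - 2025    # five-year window

growth = annual_growth_factor(start_pf, end_pf, years)

# Doubling time under the same constant-growth assumption
doubling_months = 12.0 * math.log(2.0) / math.log(growth)

print(f"required growth: {growth:.2f}x per year")
print(f"implied doubling time: {doubling_months:.1f} months")
```

Under that assumption, capacity would have to slightly more than double every year for five years running, which is aggressive but not unheard of for a national program ramping up from a small base.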

The Korea Institute of Science and Technology Information also took the stage to describe their next national supercomputer, KISTI-6, which was first announced in May 2025. It will be a 588 PF Cray EX254n system with 2,084 nodes of GH200, similar to Alps and Isambard-AI. This is quite a step up from its predecessor, which was an air-cooled KNL system, but it's unlikely it will unseat Fugaku; the 588 PF number cited appears to be the sum of 2,084 GH200 nodes, 800 Turin CPU nodes, and 20 H200 SXM5 nodes. The HPL score of its GH200 nodes will place it below Alps and somewhere around 350 PF, likely joining a flood of multi-hundred-petaflops GH200 systems that will appear between now and ISC26.

Singapore (NSCC) and Taiwan (NCHC) both presented their national programs as well, but they appear to be much more nascent, and the size of their HPC infrastructure was presented as aggregate capacity, not capability. Their strategies involve partnership with Japan or Korea, but both had specific carveouts for both sovereign AI and quantum computing. Interestingly, their use cases for AI both had a strong story about training models that understood the diversity of languages and dialects represented in their nations. For example, it is not unusual for people to switch languages or dialects mid-sentence in Singapore, and the big Western models aren't designed for that reality. Similarly, Taiwan has 16 indigenous tribes with 42 dialects. It seemed like enabling LLMs that reflect the breadth of languages used in Singapore and Taiwan has become the responsibility of these nations' respective national supercomputing efforts.

That said, that noble mission didn't seem to be matched with substantial training infrastructure; these localized models will be relying on a couple hundred GPUs here and there, wedged into existing HPC centers. Thus, these sovereign models are probably going to be fine-tuned variants of open models, aligning with my earlier observation that these smaller nations will be innovating around the edges of HPC and AI.

What was missing? Although Vietnam, Thailand, Malaysia, and other Asian nations have strong HPC programs centered around industrial uses, they were not represented in ISC's HPC Around the World track. Also absent was any meaningful discussion around cloud; while everyone had a throwaway line about cloud in their presentations, the fact that the only big clouds in Asia are Chinese and American probably makes it unappealing to integrate them into the core of these nations' national HPC strategies. Speaking from experience, this is quite different from the attitudes of commercial HPC users across Asia who are all too happy to let someone else run HPC datacenters for them.

The Middle East

Although KAUST has been a world-class HPC center in the Middle East for the past fifteen years, AI seems to be where the majority of new investment into HPC is going.

In describing new efforts in Saudi Arabia, Prof. David Keyes casually mentioned the Saudi HUMAIN effort, which will build 500 MW of datacenter capacity and 18,000 GB300 GPUs, after describing the Shaheen-3 GH200 upgrade that "might (barely)" put it back in the Top20 by SC'25. Similarly, Dr. Horst Simon walked through a few of Abu Dhabi's university clusters (each having dozens of GPU nodes) after skating through an announcement that a 5 GW AI campus was also being built in Abu Dhabi. The gap between investment in AI and investment in HPC was striking.

I also had a brief conversation with someone from one of the major Abu Dhabi universities, and I was very surprised to find that I was talking to a real AI practitioner--not an HPC person moonlighting in AI--who spoke at the same depth as the customers with whom I work in my day job. The nature of his work made it clear to me that, despite his university not having a Top500 system, he was familiar with running training and inference at scales and with sophistication that is far beyond the experience of most ISC attendees.

These interactions led me to the conclusion that the Middle East's approach to "sovereign AI" is quite different from Europe's. Rather than building HPC systems with GPUs, letting HPC centers operate them, and calling them sovereign AI platforms, nations like Saudi Arabia and UAE are keeping HPC and AI separate. Like in the US, they are going straight to hyperscale with AI, and they have no preconceived notion that anything resembling a supercomputer must be hosted at a supercomputer center.

Of course, only nations like Saudi Arabia and UAE can afford to do this, because they have trillion-dollar sovereign wealth funds to invest in massive infrastructure buildout that isn't contingent on public consensus or the latest election cycle. Just as UAE's Core42 can build a 5 GW datacenter campus with little oversight, these nations can easily mis-step and invest a ton of money in an AI technology that turns out to be a flop. In the end, it seems like these Middle Eastern nations are willing to take bigger risks in how they build out their sovereign AI infrastructure, because they are largely starting from a blank sheet of paper. They aren't limiting themselves to 20 MW supercomputers like the HPC world has.

All things being equal, this might turn out to be an advantage over other nations who are more hesitant to deviate from the tried-and-true course of buying a Cray or a Bull, sticking some GPUs in it, and calling it AI. If these Middle Eastern nations do everything right, they stand to get a lot further and move a lot faster in sovereign AI than Europe, and it'll be fascinating to see how quickly they catch up with the sort of frontier AI research being done in private industry. But, as with the US AI industry, it doesn't seem like these AI practitioners are going to be attending ISC in the same way European sovereign AI folks do; the roads of HPC and AI seem to run parallel without intersecting in the Middle East.

Exhibitors

ISC had a record number of exhibitors this year, and as usual, I tried to set aside at least an hour or two to walk the floor and see what technologies are on the horizon. This year, though, the exhibit hall was not a great representation of the rest of the conference. Everyone I talked to about the exhibit said one of two things:

- There are a LOT of quantum companies.

- A lot of big companies were noticeably absent.

It also didn't feel like the biggest exhibit ever, partially because of the second point, and partially because many of the exhibitors--one in five--were exhibiting for the first time this year. This meant a lot of the booths were small and barebones, and many of them belonged to either companies at the periphery of HPC (such as companies that make dripless couplers for liquid cooling) or small startups who just had a desk, a few pens, and some brochures.

On the first point, it was true--quantum computing was well represented, with 22% of exhibitors identifying as being involved in the field in some form. In fact, quantum felt over-represented, since the ISC technical program certainly didn't have such a large fraction of talks on quantum computing topics. I didn't have time to actually talk with any of these quantum companies though, so I wasn't able to get a sense of why the startup ecosystem around quantum computing was so rich in Europe as compared to the US.

While there was an abundance of quantum this year, a number of the big HPC and HPC-adjacent companies were noticeably absent:

- Amazon, Azure, and Google did not have booths despite having booths last year. Amazon and Google still sponsored the conference at the lowest tier (bronze) though, while Microsoft did not sponsor at all.

- Intel had neither booth nor sponsorship despite having the #3 system on Top500. I don't think they held a party this year, either. AMD didn't have a booth, but they sponsored (and gave the opening keynote!).

- WEKA neither had a booth nor sponsored the conference this year, although they were the leading sponsor of the Student Cluster Competition. Competitors DDN, VAST, Quobyte, and BeeGFS all had booths, but only VAST sponsored. Curiously, Pure and Scality, which do not have big footholds in leadership HPC, had both booths and sponsorship.

These companies who chose not to have a booth still sent people to the conference and were conducting meetings as usual, though. This suggests that there's something amiss with how large companies perceive the return on investment of having a booth at ISC. I don't have any insider knowledge here, but I was surprised by the pullback since ISC has historically been very good at incentivizing attendees to walk through the expo hall by putting it between the technical sessions and the food breaks.

As I walked the exhibit floor, I found that prominent booths spanned the whole HPC stack: software, system integrators, component makers (CPUs, GPUs, HBM and DDR, and SSD and HDD), and datacenter infrastructure were all exhibiting. The most eye-catching booths were those with big iron on display: HPE/Cray had a full EX4000 cabinet and CDU on display, and there were a few Eviden BullSequana nodes floating around.

Sadly, though, there were no full BullSequana X3000 racks on display. I've still never seen one in real life.

Infrastructure companies like Motivair (which manufactures the CDUs for Cray EX) and Rittal (which I know as a company that manufactures racks) also had big liquid-liquid heat exchangers on display with shiny steel piping. Here's a smaller version of the Cray EX CDU that Motivair was displaying:

I got to chatting with some good folks at Motivair, and I learned that the 1.2 MW variant that is used with Cray EX has a 4" connection--the same size as the water main in my co-op. Since I recently helped with the replacement of my building's water main, this led me down a rabbit hole where I realized that the flow rate for this CDU is roughly the same as my apartment building's too, which is to say, a single Cray CDU moves as much fluid as a 55-unit apartment building. Incidentally, a single Cray EX cabinet supports roughly the same electrical capacity as my 55-unit building too--I am in the process of replacing our 1,200 A service panel, which comes out to about the same 400 kVA as a fully loaded EX cabinet.
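For anyone who wants to sanity-check the electrical comparison, here is a minimal sketch of the arithmetic. The service voltage isn't stated above, so the 208 V line-to-line, balanced three-phase service used here is purely an assumption (it is a common arrangement for US apartment buildings), and the names are made up for illustration.

```python
import math

def three_phase_kva(line_voltage_v: float, line_current_a: float) -> float:
    """Apparent power of a balanced three-phase service: S = sqrt(3) * V_LL * I."""
    return math.sqrt(3) * line_voltage_v * line_current_a / 1000.0

ASSUMED_LINE_VOLTAGE_V = 208.0   # assumption: 120/208 V three-phase service
SERVICE_CURRENT_A = 1200.0       # the 1,200 A service panel mentioned above

building_kva = three_phase_kva(ASSUMED_LINE_VOLTAGE_V, SERVICE_CURRENT_A)
print(f"building service: ~{building_kva:.0f} kVA")
```

With those assumptions the service works out to a bit over 430 kVA, which is in the same ballpark as the roughly 400 kVA figure; a different service voltage would shift the number accordingly.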

Aside from the Cray cabinets and CDUs, which are no longer new to ISC, I couldn't put my finger on any particularly outstanding booths this year though. The exhibit felt like a sea of smaller companies, none of which really grabbed me. This isn't to say that big vendors were wholly absent though. Despite not having booths, all three big cloud providers threw parties during the week: AWS and NVIDIA teamed up on a big party with over a thousand registrants, while Google and Microsoft held smaller parties towards the end of the week. HPE also threw a lovely event that was off the beaten path along the Elbe, resulting in a less-crowded affair that made it easy to catch up with old friends.

I may be reading too much into this year's exhibit, but it felt like ISC might be transforming into an event for smaller companies to gain visibility in the HPC market, while larger companies apply their pennies only in the parts of the conference with the highest return. Whether a company chose to have a booth, sponsor the conference, and/or throw a party seemed to defy a consistent pattern though, so perhaps other factors were at play this year.

Cloud, or lack thereof

Because I work for a large cloud service provider, I attended as many cloud HPC sessions as I could, and frankly, I was disappointed. The clear message I got by the end of the week was that Europe--or perhaps just ISC--doesn't really care about the cloud. This is quite different from the view in the US, where the emergence of massive AI supercomputers has begun to shift opinions to the point where the successor to the Frontier supercomputer at OLCF might wind up in the cloud. I suppose cloud is a lot less attractive outside of the US, since all the major cloud providers are US corporations, but the way in which cloud topics were incorporated into the ISC program this year sometimes felt like a box-checking exercise.

For example, I attended the BOF on "Towards a Strategy for Future Research Infrastructures" which I expected to be a place where we discussed the best ways to integrate traditional HPC with stateful services and other workflow components. While cloud was mentioned by just about every panelist, it was almost always in a throwaway statement, lumped in with "the edge" or cited as a vague benefit to "new workflows and interactive analysis" with no further detail. One speaker even cited egress fees as a big challenge which, to me, means they haven't actually talked to a cloud provider in the last five to ten years. If egress fees are what stop you from using the cloud, you're talking to the wrong account team.

I get it though; there are times when cloud doesn't offer enough obvious benefit for HPC to justify the effort required to figure it out. In those cases, it's incumbent on cloud providers to provide a better story. But I was also disappointed by the invited session called "Bridging the Gap: HPC in the Cloud and Cloud Technologies in HPC," which I hoped would be the place where cloud providers could make this case. Instead, only two of the three CSPs were even invited to speak, and it was clear that the speakers did not all get the same assignment with their invitations. Granted, the CSP for whom I work was the one not invited (so I came in a little biased), but I was surprised by how differently each speaker used their time.

Dr. Maxime Martinasso from CSCS gave a talk from the perspective of trying to add cloud-like capabilities to a supercomputer, which is a recurring pattern across a number of sites (including many in the US DOE) and projects. He explained the way they're creating an infrastructure-as-code domain-specific language that sits on top of Alps, their Cray EX system, to give users the ability to bring their own software stacks (all the way down through Slurm) to the supercomputer. It was clearly a ton of work on CSCS's part to develop this capability, and yet the talk's "future work" slide contained a bunch of features which those of us in the cloud would consider "P0"--priority zero, or essential for a minimum viable product.

By the end of Martinasso's talk, I realized that CSCS's perspective is that, unlike commercial cloud, these cloudy features aren't P0; having a supercomputer on the floor is. He made the case that CSCS has a need to explore diverse computing architectures and accelerators (as evidenced by the five different node types in Alps!), and putting them all on a single RDMA fabric isn't something any cloud provider will do. As a result, adding any new cloud-like capability to the heterogeneous supercomputer is just gravy, and the fact that true cloud is more "cloudy" than Alps is irrelevant since the cloud will never support the intra-fabric heterogeneity that Alps does.

The other two speakers represented big cloud providers, and their talks had a bit more product pitch in them. One speaker talked through the challenges the cloud is facing in trying to fold supercomputing principles into existing cloud infrastructure (a theme I repeated in my talk later in the week) before talking about specific products that have arisen from that. It touched on some interesting technologies that the HPC world hasn't yet adopted (like optical circuit switching--super cool stuff for programmable fabrics), and I learned a few things about how that provider might bring new HPC capabilities to the table for specific workloads.

The other speaker, though, presented a textbook pitch deck. I've given almost the exact same presentation, down to showing the same sort of customer stories and product comparison tables, during customer briefings. Execs in the audience would eat it up while engineers' eyes would glaze over, and having to do that song and dance is partly why I didn't make it as a product manager. I was incredulous that such a presentation was an invited talk at one of the most prestigious HPC conferences in the world.

This is not to say I was mad at the speaker. He did exactly what one would expect from a leader in the sales side of an organization, hitting all the notes you'd want in a textbook pitch aimed at the C-suite. Rather, I was disappointed by the choice by the session organizers; when you invite someone whose job is driving business at one of the largest cloud providers to speak, you should fully expect a broad and salesy presentation. I don't think it's a stretch to say that most ISC attendees aren't looking for these sorts of high-level talks designed for enterprise decision-makers; they want insight and technical depth.

Was I miffed that a competitor got to give a twenty-minute sales pitch during a session at which I wasn't invited to speak? Absolutely. And do I think I could've given a talk in which even the most ardent cloud-hater would find something interesting? Probably. But since that didn't happen, the best I can do is complain about it on the Internet and hope that next year's program committee puts more care into organizing an invited speaker session on cloud and HPC.

Thankfully, I was given the opportunity to talk a little about my work at the SuperCompCloud workshop on Friday. That workshop felt like what the "Bridging the Gap" invited session should've been, and there were roughly equal numbers of presentations on adding cloud-like features to HPC infrastructure and adding HPC-like features to cloud infrastructure. From my perspective, the workshop was great; I got to see how traditional HPC centers are adopting cloud practices into their operations, and I could explain how we in Azure overcame some of the challenges they're facing. But to my point at the outset of this section--that Europe doesn't really care about the cloud--the majority of speakers at SuperCompCloud were American.

Parting thoughts

As I said at the outset, there were way more sessions that I missed than I attended. In addition, a lot of the big headlines of the week were coincident with, not made at, the conference. A few noteworthy announcements during the week that I won't go into detail about include:

- £750M was awarded to EPCC to deploy what sounds like the UK's first exascale system. This announcement's overlap with ISC was a total coincidence, so EPCC didn't have many details to share.

- The Ultra Ethernet Consortium announced the long-awaited version 1 of its spec. I'm not sure how relevant this is to HPC yet, but given how many networking talks compared themselves against InfiniBand, I think there's a lot of appetite for a high-performance, non-proprietary alternative.

- Sadly, HPC_Guru announced his retirement mid-week as well. It's not clear this was deliberately timed with ISC, but it was acknowledged on the big stage during the ISC closing statements and resulted in a lot of recognition online. I credit HPC_Guru, whoever he is, with a lot of the success I've enjoyed in my career, as he amplified my voice as far back as 2009 when I first started on Twitter. Maybe with his retirement, I should try to do for others what he did for me.

And along the lines of reflecting back over the years, this was ISC's 40th anniversary, and the organizers had a few wonderful features to commemorate the milestone. Addison Snell organized a panel where a variety of attendees got to discuss the impact that the conference has had on them over the past 40 years, and I was delighted to find that I was not the only person to reflect back on how ISC has shaped my career. As critical as I can be of specific speakers and sessions when I write up these notes, I do hope it goes without saying that I wouldn't bother doing all this for a conference that wasn't deeply engaging and rewarding to be a part of.

Going back to this year's theme of connecting the dots, I think it's apt. Some ways in which HPC connected dots at ISC this year were obvious; the conference brought together people with a common interest in high-performance computing from across 54 countries and seven continents this year. But this year's conference also made it clear that the role of HPC going forward may be connecting the dots between different technologies being developed for AI, cloud, enterprise, and other markets and the problems in scientific computing that need to be solved.

The latest and greatest Blackwell GPUs barely registered at ISC this year, and the HPC community seems OK with that now. Instead of the focus being on the absolute top-end in high-performance accelerators, HPC's focus was on connecting the dots between last generation's GPUs and today's grand challenges in science. Instead of showcasing the newest innovations in secure computing in the cloud, HPC's focus was on connecting the dots between a few relevant pieces of zero trust and big-iron on-prem supercomputers.

HPC has always been about figuring out ways to use stuff invented for someone else to solve scientific challenges--connecting the dots. Beowulf clusters started that way, GPGPU computing started that way, and emulating DGEMMs (and other primitives) on AI accelerators will probably follow the same pattern. But different nations are drawing different lines between the dots; while the US might draw a shorter line between commercial cloud and HPC at scale, Europe is drawing shorter lines between HPC for scientific computing and HPC for sovereign AI.

If we accept that connecting the dots may be where the HPC community can make the most impact, then it's fitting that ISC chose to carry forward the theme of "connecting the dots" into ISC'26. This break from the tradition of introducing a new tagline each year suggests that, at times, optimizing what we already have can take us further than pursuing something completely new. After 40 years, ISC remains not only a showcase of innovation, but a reflection of how the HPC community (and its role in the technology landscape) is evolving. If we continue to embrace this theme of stitching together breakthroughs instead of spotlighting them individually, the HPC community is likely to be more relevant than ever alongside--not in spite of--the overwhelming momentum of hyperscale and AI.